Supermicro X14 Gaudi® 3 AI Training and Inference Platform

Bringing choice to the enterprise AI market, the new Supermicro X14 AI training platform is built on third-generation Intel® Gaudi® 3 accelerators, designed to further increase the efficiency of large-scale AI model training and AI inferencing. Available in both air-cooled and liquid-cooled configurations, Supermicro's X14 Gaudi 3 solution scales to meet a wide range of AI workload requirements.

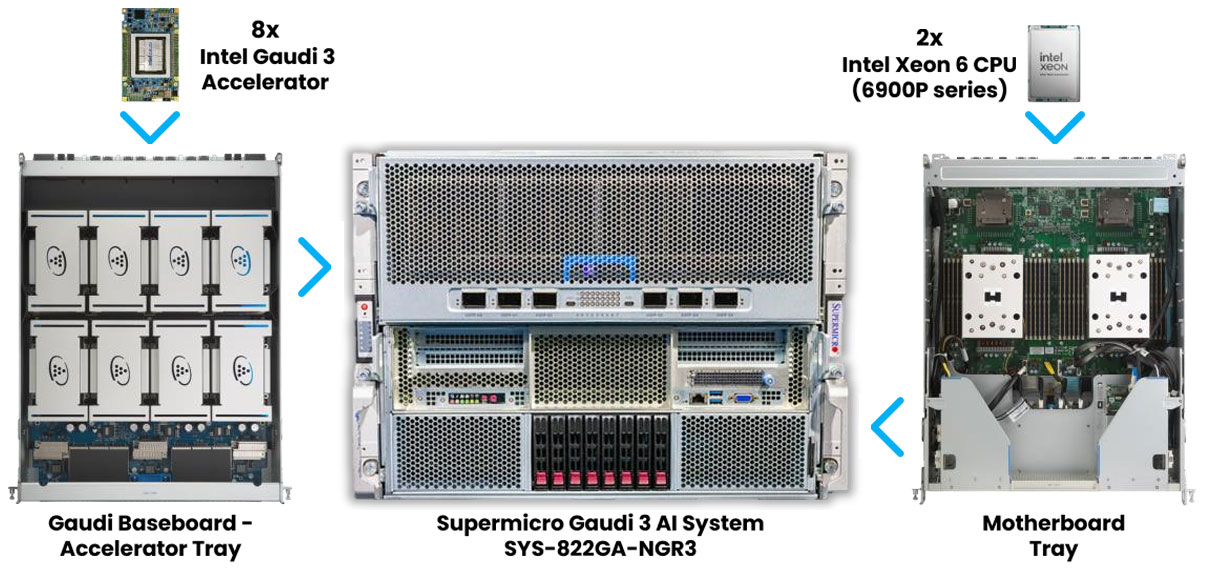

- GPU: 8 Gaudi 3 HL-325L (air-cooled) or HL-335 (liquid-cooled) accelerators on OAM 2.0 baseboard

- CPU: Dual Intel® Xeon® 6 processors

- Memory: 24 DIMMs - up to 6TB memory in 1DPC

- Drives: Up to 8 hot-swap PCIe 5.0 NVMe

- Power Supplies: 8 3000W high efficiency fully redundant (4+4) Titanium Level

- Networking: 6 on-board OSFP 800GbE ports for scale-out

- Expansion Slots: 2 PCIe 5.0 x16 (FHHL) + 2 PCIe 5.0 x8 (FHHL)

- Workloads: AI Training and Inference
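
The headline figures in the list above imply a few derived totals. The following is a rough sketch of that arithmetic only: the names are illustrative, the per-DIMM figure assumes all 24 slots are populated evenly and 1 TB = 1024 GB, and the networking figure is raw line rate rather than measured throughput.

```python
# Back-of-the-envelope totals derived from the spec list above.
# All names are illustrative; the input values come from the list.

DIMM_SLOTS = 24
MAX_MEMORY_TB = 6
OSFP_PORTS = 6
PORT_SPEED_GBPS = 800   # gigabits per second per OSFP port
PSU_WATTS = 3000
PSUS_REQUIRED = 4       # 4+4 redundancy: the load must fit within 4 supplies


def per_dimm_capacity_gb() -> int:
    """DIMM size needed to reach the maximum (assumes 1 TB = 1024 GB)."""
    return MAX_MEMORY_TB * 1024 // DIMM_SLOTS


def scale_out_line_rate_gbps() -> int:
    """Aggregate raw line rate of the on-board scale-out ports."""
    return OSFP_PORTS * PORT_SPEED_GBPS


def redundant_power_budget_watts() -> int:
    """Power available while half of the supplies are held in reserve."""
    return PSUS_REQUIRED * PSU_WATTS


if __name__ == "__main__":
    print("Per-DIMM capacity (GB):", per_dimm_capacity_gb())
    print("Scale-out line rate (Gb/s):", scale_out_line_rate_gbps())
    print("Redundant power budget (W):", redundant_power_budget_watts())
```

These are upper bounds read straight off the spec sheet; actual usable power and network throughput depend on configuration and workload.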

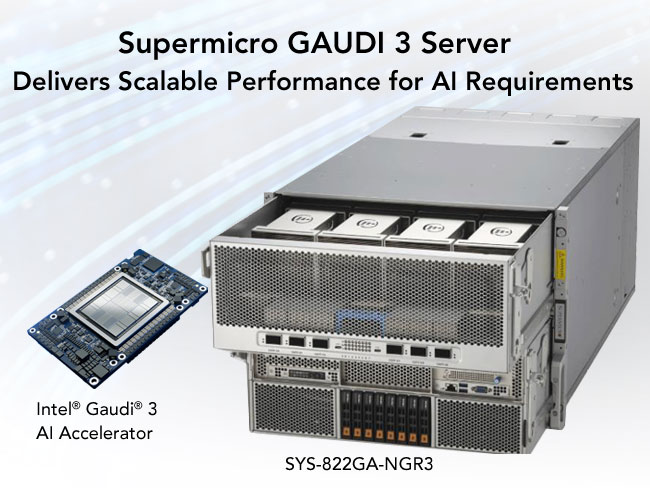

Intel® Gaudi® 3 Server (Dual Intel® Xeon® 6900 series processors with P-cores): SYS-822GA-NGR3

The Intel Gaudi 3 is a powerful AI accelerator designed to handle demanding training and inference workloads. It is part of Intel's Gaudi platform, which aims to provide a high-efficiency solution for enterprise AI applications. Key features and benefits of the Gaudi 3 include:

- Exceptional performance: Delivers impressive performance for training and inference of large language models (LLMs) and other AI models.

- Scalability: Supports flexible networking based on open, industry-standard Ethernet, allowing for efficient scaling of systems to meet the needs of demanding AI workloads.

- Ease of use: Provides a user-friendly development platform and is supported by Intel Developer Cloud, simplifying the process of building and deploying AI applications and reducing costs associated with open-source software.

- Networking capabilities: Features 6 integrated on-board OSFP 800GbE ports for massive scale-out networking. The Supermicro Gaudi 3 server is the only one in the industry powered by Intel Xeon 6900 series processors with P-cores.

Gaudi® 3 AI Accelerator Overview

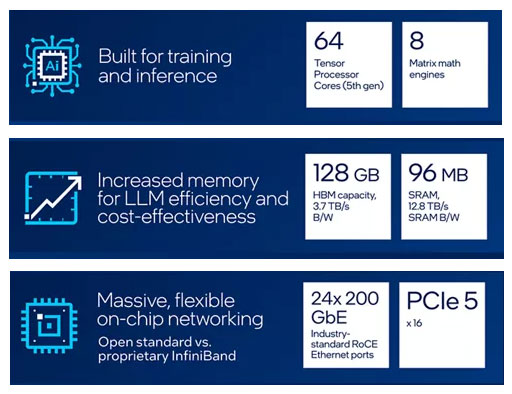

AI applications increasingly demand faster and more energy-efficient hardware solutions, and the Intel® Gaudi® 3 AI Accelerator was designed to answer that demand. With more than 2x the FP8 GEMM FLOPs and more than 4x the BF16 GEMM FLOPs of the Intel® Gaudi® 2 AI Accelerator, the Intel® Gaudi® 3 AI Accelerator continues to provide state-of-the-art AI training performance. With 1.5x faster HBM bandwidth and 1.33x larger HBM capacity, the Intel® Gaudi® 3 AI Accelerator also provides a substantial improvement in large language model inference performance compared to the Intel® Gaudi® 2 AI Accelerator.

The Intel® Gaudi® 3 AI Accelerator features two identical compute dies, connected through a high-bandwidth, low-latency interconnect over an interposer bridge. The die-to-die connection is transparent to the software, providing performance and behavior equivalent to that of a large unified single die.

The Intel® Gaudi® 3 AI Accelerator compute architecture is heterogeneous and includes two main compute engines: a Matrix Multiplication Engine (MME) and a fully programmable Tensor Processor Core (TPC) cluster. The MME handles all operations that can be lowered to matrix multiplication, such as fully connected layers, convolutions, and batched GEMMs. The TPC, a Very Long Instruction Word (VLIW) Single-Instruction Multiple-Data (SIMD) processor tailor-made for deep learning applications, accelerates all non-GEMM operations.
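
As a toy illustration of this division of labor, the sketch below sorts a list of operations into those that lower to matrix multiplication (MME) and everything else (TPC). The operation names, the lookup set, and both helper functions are hypothetical and exist only for this example; they do not represent the actual placement logic of the Gaudi software stack, which this page does not describe.

```python
# Toy illustration of the MME/TPC split described above.
# Operation names and the lookup set are hypothetical examples,
# not the real compiler's placement rules.

GEMM_LIKE_OPS = {"fully_connected", "convolution", "batched_gemm"}


def assign_engine(op_name: str) -> str:
    """Return 'MME' for ops that lower to matrix multiplication, else 'TPC'."""
    return "MME" if op_name in GEMM_LIKE_OPS else "TPC"


def partition(ops):
    """Group operation names by the engine they would be assigned to."""
    plan = {"MME": [], "TPC": []}
    for op in ops:
        plan[assign_engine(op)].append(op)
    return plan


if __name__ == "__main__":
    block = ["fully_connected", "bias_add", "gelu", "batched_gemm", "softmax"]
    print(partition(block))
```

In this simplified picture, the dense layers land on the MME while element-wise and normalization-style steps such as the bias add, activation, and softmax fall to the TPC.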

Supermicro X14 Gaudi® 3 AI Training Server

Supermicro's X14 purpose-built AI training platform is the industry's first Gaudi® 3 system powered by Intel® Xeon® 6 processors.

In this video, Supermicro's Sr. Director of Technology Enablement Thomas Jorgensen explains how X14 and Gaudi® 3 are bringing choice and flexibility to the enterprise AI market.

Supermicro Gaudi®2 AI Training Server

For Advanced Training and Inference Performance

The Supermicro Gaudi®2 AI Training Server (SYS-820GH-TNR2) delivers a leap in deep learning training and inference performance while preserving cost-efficiency.

- Featuring eight Habana® Gaudi®2 AI Training Processors

- Dual 3rd Gen Intel® Xeon® Scalable processors

- Expanded networking capacity with 24x 100 Gb RoCE ports integrated onto every Gaudi2

- 700 GB/second scale within the server and 2.4TB/second scale out

- Ease of system build or migration with Habana® SynapseAI® Software Stack