ARS-221GL-NR

DP NVIDIA Grace Superchip Server with up to 2 L40S or 2 single non-bridged NVIDIA H100 NVL

- High density 2U GPU system with up to 2 NVIDIA® PCIe GPUs

- PCIe-based NVIDIA H100 NVL and NVIDIA L40S

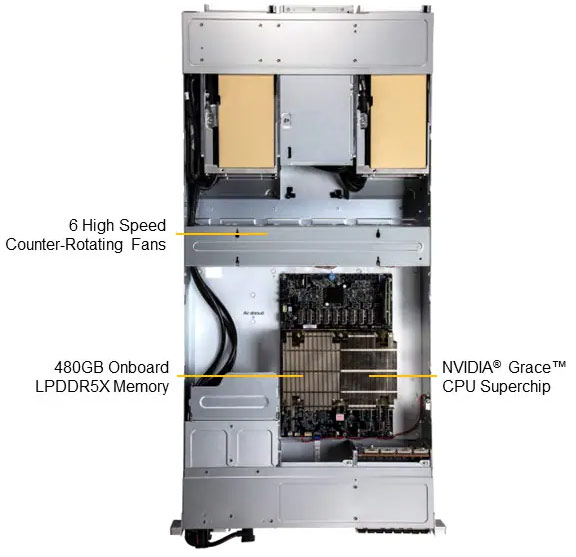

- Energy-Efficient NVIDIA Grace™ CPU Superchip with 144 Cores

- 480GB or 240GB LPDDR5X onboard memory option for minimum latency and maximum power efficiency

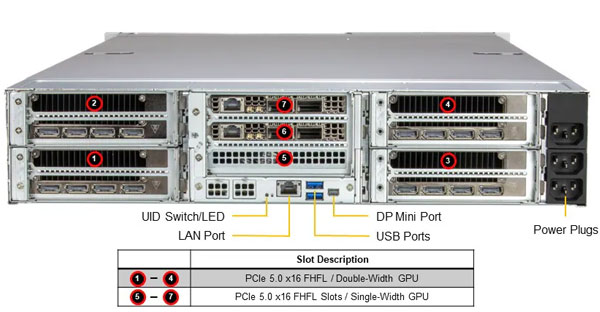

- 7 PCIe 5.0 x16 FHFL Slots

- NVIDIA BlueField-3 Data Processing Unit Support for the most demanding accelerated computing workloads.

- E1.S NVMe Storage Support

Key Applications

- High Performance Computing

- Large Language Model (LLM) Natural Language Processing

- AI/Deep Learning Training

- General purpose CPU workloads, including analytics, data science, simulation, application servers

Product Specification

| Product SKU | ARS-221GL-NR |

| Motherboard | Super G1SMH |

| Processor | |

| CPU | NVIDIA Grace™ CPU Superchip |

| Core Count | Up to 144C/288T |

| Note | Supports up to 500W TDP CPUs (Air Cooled) |

| GPU | |

| Max GPU Count | Up to 4 double-width GPU(s) |

| Supported GPU | NVIDIA PCIe: H100 NVL, L40S |

| CPU-GPU Interconnect | PCIe 5.0 x16 CPU-to-GPU Interconnect |

| GPU-GPU Interconnect | NVIDIA® NVLink™ Bridge (optional) |

| System Memory | |

| Memory | Slot Count: Onboard Memory; Max Memory: Up to 480GB ECC LPDDR5X |

| Memory Voltage | 1.1 V |

| On-Board Devices | |

| Chipset | System on Chip |

| Network Connectivity | 1x 10GbE BaseT with NVIDIA ConnectX®-7 or BlueField®-3 DPU |

| IPMI | Support for Intelligent Platform Management Interface v.2.0; IPMI 2.0 with virtual media over LAN and KVM-over-LAN support |

| Input / Output | |

| Video | 1 VGA port(s) |

| System BIOS | |

| BIOS Type | AMI 32MB SPI Flash EEPROM |

| Management | |

| Software | OOB Management Package (SFT-OOB-LIC); Redfish API; IPMI 2.0; SSM; Intel® Node Manager; SPM; KVM with dedicated LAN; SUM; NMI; Watch Dog; SuperDoctor® 5 |

| Power Configurations | ACPI Power Management; Power-on mode for AC power recovery |

| PC Health Monitoring | |

| CPU | 8+4 Phase-switching voltage regulator; Monitors for CPU Cores, Chipset Voltages, Memory |

| FAN | Fans with tachometer monitoring; Pulse Width Modulated (PWM) fan connector; Status monitor for speed control |

| Temperature | Monitoring for CPU and chassis environment; Thermal Control for fan connectors |

| Chassis | |

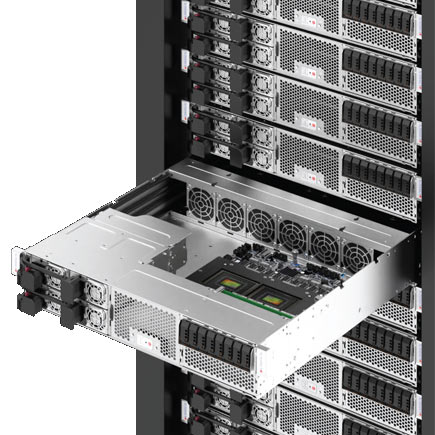

| Form Factor | 2U Rackmount |

| Model | CSE-GP201TS-R000NP |

| Dimensions and Weight | |

| Height | 3.46" (88mm) |

| Width | 17.25" (438.4mm) |

| Depth | 35.43" (900mm) |

| Package | 11" (H) x 22.5" (W) x 45.5" (D) |

| Weight | Net Weight: 67.5 lbs (30.6 kg); Gross Weight: 86.5 lbs (39.2 kg) |

| Available Color | Black front & silver body |

| Front Panel | |

| Buttons | Power On/Off button; System Reset button |

| Expansion Slots | |

| PCI-Express (PCIe) | 7 PCIe 5.0 x16 FHFL slot(s) |

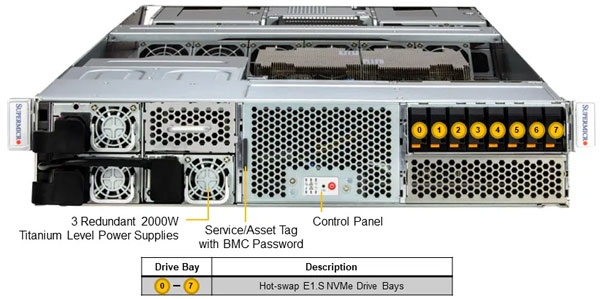

| Drive Bays / Storage | |

| Hot-swap | 8x E1.S hot-swap NVMe drive slots |

| System Cooling | |

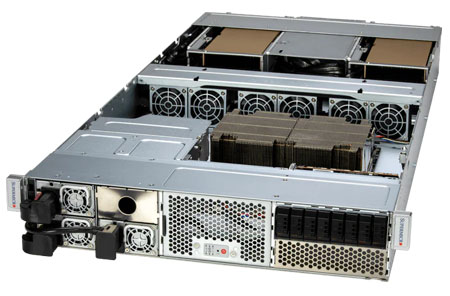

| Fans | 6 heavy duty fans with optimal fan speed control |

| Power Supply | 2000W Redundant Titanium Level power supplies |

| Operating Environment | |

| Environmental Spec. | Operating Temperature: 10°C to 35°C (50°F to 95°F); Non-operating Temperature: -40°C to 60°C (-40°F to 140°F); Operating Relative Humidity: 8% to 90% (non-condensing); Non-operating Relative Humidity: 5% to 95% (non-condensing) |
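The Management row above lists a Redfish API among the supported software. As a rough illustration of what talking to that interface can look like, the following Python sketch builds URLs under the standard DMTF service root (`/redfish/v1`) and extracts member links from a collection document. The helper names (`redfish_url`, `member_paths`, `fetch_json`) and the host name in the usage note are hypothetical and not part of any Supermicro tooling. Authentication and TLS certificate trust are left out on purpose, so `fetch_json` is only expected to work as written against resources a BMC serves without credentials, such as the service root.

```python
import json
import urllib.request

# Service root path defined by the DMTF Redfish standard.
REDFISH_ROOT = "/redfish/v1"


def redfish_url(bmc_host: str, resource: str = "") -> str:
    """Build an HTTPS URL under the Redfish service root of a BMC.

    `bmc_host` is whatever address the BMC is reachable at (an assumption
    of this sketch; the datasheet does not specify one).
    """
    return f"https://{bmc_host}{REDFISH_ROOT}/{resource.lstrip('/')}"


def member_paths(collection: dict) -> list[str]:
    """Return the @odata.id link of every member in a Redfish collection."""
    return [member["@odata.id"] for member in collection.get("Members", [])]


def fetch_json(url: str, timeout: float = 10.0) -> dict:
    """GET a Redfish resource and decode the JSON body.

    Needs a reachable BMC with a trusted certificate; no credentials are sent.
    """
    request = urllib.request.Request(url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

A call such as `fetch_json(redfish_url("bmc.example.com"))` would request the service root of a BMC at that placeholder address. `member_paths` can then be applied to any collection document returned by the service, for example the systems collection, once suitable credentials are supplied.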

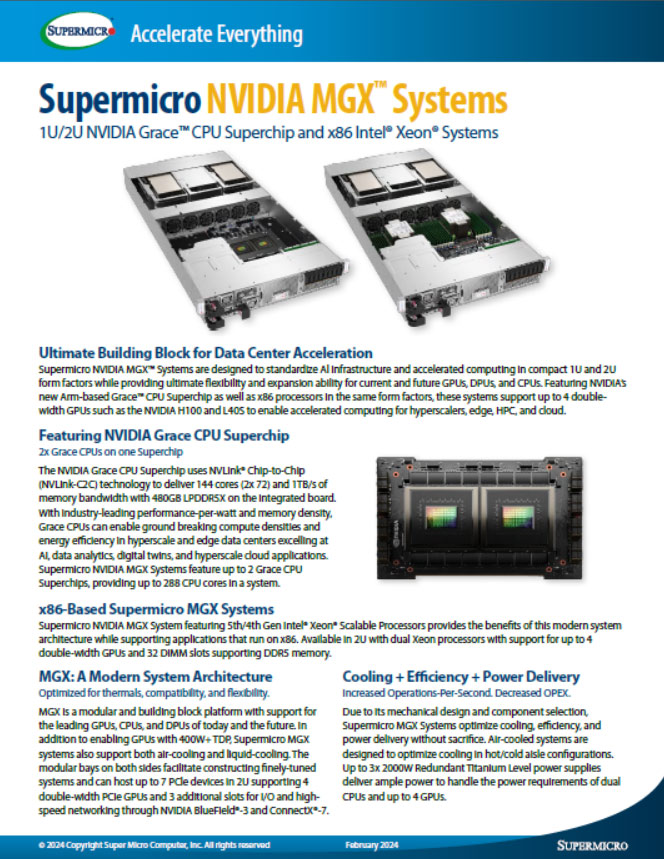

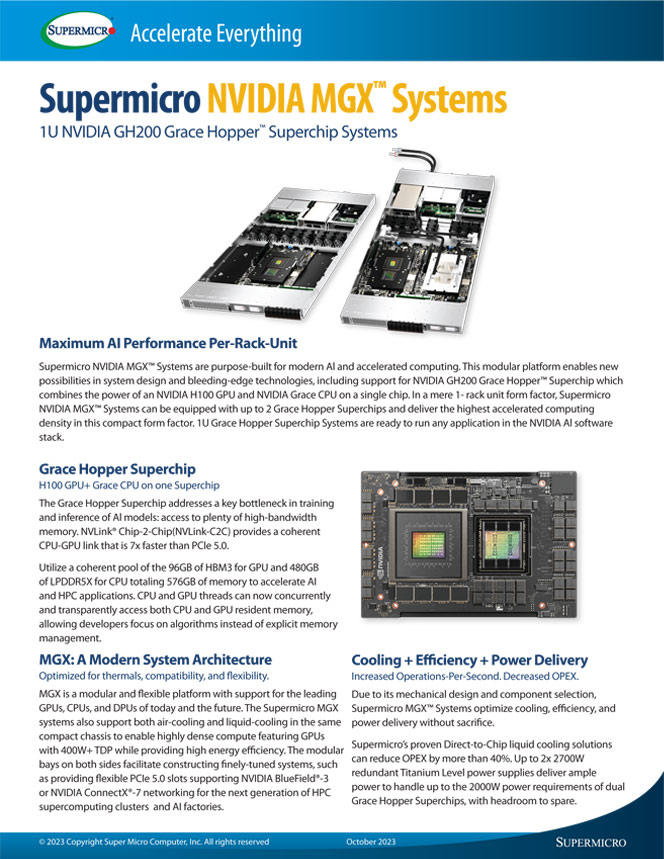

Enterprise AI Inferencing & Training System

2U Grace MGX System

Modular Building Block Platform with Energy-efficient Grace CPU Superchip

Benefits & Advantages

- Two NVIDIA Grace CPUs on one Superchip with 144-core and up to 500W CPU TDP

- 900GB/s NVLink® Chip-2-Chip (C2C) high-bandwidth, low-latency interconnect between Grace CPUs

- NVIDIA MGX reference design that enables a wide array of platforms and configurations

- 7 PCIe 5.0 x16 slots in 2U with up to 4 PCIe FHFL DW GPUs and 3 NICs or DPUs
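The slot budget in the last bullet (4 GPUs plus 3 NICs or DPUs filling 7 slots) can be checked with a few lines of Python. This is only a sketch: the limits are copied from the text above, the names `SlotPlan`, `problems`, and `free_slots` are made up for illustration, and it assumes each card, including a double-width GPU, is counted against exactly one of the 7 x16 slots, which is what the 4 + 3 = 7 split implies.

```python
from dataclasses import dataclass

# Limits as stated in the datasheet; constant names are illustrative only.
TOTAL_SLOTS = 7      # 7 PCIe 5.0 x16 FHFL slots
MAX_GPUS = 4         # up to 4 double-width PCIe GPUs
MAX_NICS_DPUS = 3    # up to 3 NICs or DPUs


@dataclass(frozen=True)
class SlotPlan:
    """A proposed card population (hypothetical helper, not a vendor tool)."""
    gpus: int
    nics_dpus: int

    def problems(self) -> list[str]:
        """Return the reasons this plan exceeds the stated limits, if any."""
        issues = []
        if self.gpus < 0 or self.nics_dpus < 0:
            issues.append("card counts must be non-negative")
        if self.gpus > MAX_GPUS:
            issues.append(f"{self.gpus} GPUs exceeds the limit of {MAX_GPUS}")
        if self.nics_dpus > MAX_NICS_DPUS:
            issues.append(
                f"{self.nics_dpus} NICs/DPUs exceeds the limit of {MAX_NICS_DPUS}"
            )
        # Assumption: one x16 slot per card, regardless of physical width.
        if self.gpus + self.nics_dpus > TOTAL_SLOTS:
            issues.append(f"plan needs more than {TOTAL_SLOTS} slots")
        return issues

    def free_slots(self) -> int:
        """Slots left unpopulated under the one-slot-per-card assumption."""
        return TOTAL_SLOTS - self.gpus - self.nics_dpus
```

For instance, `SlotPlan(gpus=4, nics_dpus=3).problems()` returns an empty list and leaves no free slots, while `SlotPlan(gpus=5, nics_dpus=0)` is rejected by the GPU limit. Power and thermal constraints are not modeled here.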

Key Features

- Up to 144 high-performance Arm Neoverse V2 Cores with up to 960GB LPDDR5X onboard memory

- Up to 4 PCIe GPUs: H100 (with optional NVLink Bridge on H100 NVL), L40S, or L40

- Up to 3 NVIDIA ConnectX-7 400G NDR InfiniBand cards or 3 NVIDIA BlueField®-3 cards

- 8 hot-swap E1.S and 2 M.2 slots, with front I/O and rear I/O configurations

Grace and x86 MGX System Configurations at a Glance

Supermicro NVIDIA MGX™ 1U/2U Systems with Grace™ CPU Superchip and x86 CPUs are fully optimized to support up to 4 GPUs via PCIe without sacrificing I/O, networking, or thermals. The building block architecture lets you tailor these systems to a variety of accelerated workloads and fields, including AI training and inference, HPC, data analytics, visualization/Omniverse™, and hyperscale cloud applications.

| SKU | ARS-121L-DNR | ARS-221GL-NR | SYS-221GE-NR |

| Form Factor | 1U 2-node system with NVIDIA Grace CPU Superchip per node | 2U GPU system with single NVIDIA Grace CPU Superchip | 2U GPU system with dual x86 CPUs |

| CPU | 144-core Grace Arm Neoverse V2 CPU in a single chip per node (total of 288 cores in one system) | 144-core Grace Arm Neoverse V2 CPU in a single chip | 4th Gen Intel Xeon Scalable Processors (Up to 56-core per socket) |

| GPU | Please contact our sales for possible configurations | Up to 4 double-width GPUs including NVIDIA H100 PCIe, H100 NVL, L40S | Up to 4 double-width GPUs including NVIDIA H100 PCIe, H100 NVL, L40S |

| Memory | Up to 480GB of integrated LPDDR5X memory with ECC and up to 1TB/s of bandwidth per node | Up to 480GB of integrated LPDDR5X memory with ECC and up to 1TB/s of bandwidth per node | Up to 2TB, 32x DIMM slots, ECC DDR5-4800 |

| Drives | Up to 4x hot-swap E1.S drives and 2x M.2 NVMe drives per node | Up to 8x hot-swap E1.S drives and 2x M.2 NVMe drives | Up to 8x hot-swap E1.S drives and 2x M.2 NVMe drives |

| Networking | 2x PCIe 5.0 x16 slots per node supporting NVIDIA BlueField-3 or ConnectX-7 (e.g., 1 GPU and 1 BlueField-3) | 3x PCIe 5.0 x16 slots supporting NVIDIA BlueField-3 or ConnectX-7 (in addition to 4x PCIe 5.0 x16 slots for GPUs) | 3x PCIe 5.0 x16 slots supporting NVIDIA BlueField-3 or ConnectX-7 (in addition to 4x PCIe 5.0 x16 slots for GPUs) |

| Interconnect | NVLink™-C2C with 900GB/s for CPU-CPU interconnect (within node) | NVLink Bridge GPU-GPU interconnect supported (e.g., H100 NVL) | NVLink™ Bridge GPU-GPU interconnect supported (e.g., H100 NVL) |

| Cooling | Air-cooling | Air-cooling | Air-cooling |

| Power | 2x 2700W Redundant Titanium Level power supplies | 3x 2000W Redundant Titanium Level power supplies | 3x 2000W Redundant Titanium Level power supplies |