ARS-111GL-DNHR-LCC

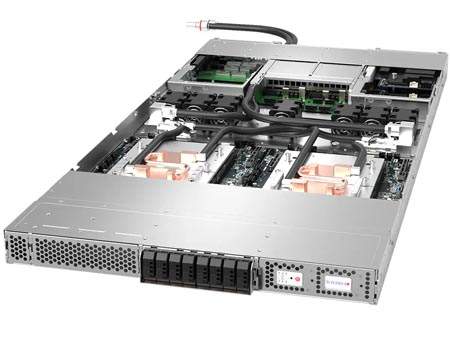

1U 2-Node NVIDIA GH200 Grace Hopper Superchip GPU Server with liquid cooling, supporting NVIDIA BlueField-3 or NVIDIA ConnectX-7

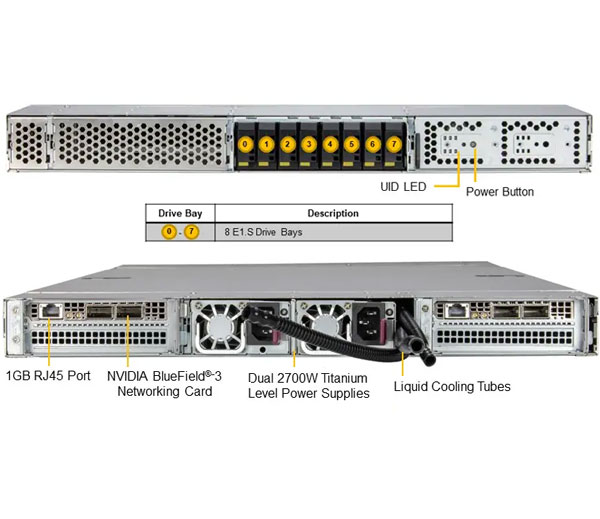

This system currently supports only two E1.S drives connected directly to the processor, and only the onboard GPU. It houses two nodes in a 1U form factor; each node supports the following:

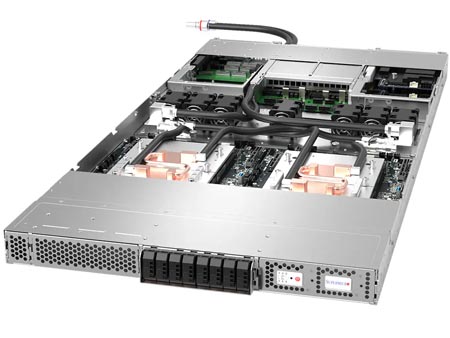

- High-density 1U 2-node GPU system with integrated NVIDIA® H100 GPU (1 per node)

- NVIDIA Grace Hopper™ Superchip (Grace CPU and H100 GPU)

- NVLink® Chip-2-Chip (C2C) high-bandwidth, low-latency interconnect between CPU and GPU at 900GB/s

- Up to 576GB of coherent memory per node (480GB LPDDR5X plus 96GB HBM3) for LLM applications

- 2x PCIe 5.0 x16 slots per node supporting NVIDIA BlueField®-3 or ConnectX®-7

- 7 hot-swap heavy-duty fans with optimal fan speed control
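As a quick check of the memory figures in the list above, the per-node total is simply the LPDDR5X and HBM3 capacities added together. The per-chassis figure is our own arithmetic, not a quoted spec, and assumes both nodes are populated at the maximum configuration listed:

```latex
% Assumption: both nodes at the maximum memory configuration
\begin{aligned}
M_{\text{node}}    &= \underbrace{480\,\text{GB}}_{\text{LPDDR5X}} + \underbrace{96\,\text{GB}}_{\text{HBM3}} = 576\,\text{GB} \\
M_{\text{chassis}} &= 2 \times M_{\text{node}} = 2 \times 576\,\text{GB} = 1152\,\text{GB}
\end{aligned}
```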

Key Applications

- High Performance Computing

- AI/Deep Learning Training and Inference

- Large Language Model (LLM) and Generative AI

| Product SKUs | ARS-111GL-DNHR-LCC (Silver) |
|---|---|
| Motherboard | Super G1SMH-G |
| Processor (per Node) | |
| CPU | NVIDIA GH200 Grace Hopper™ Superchip |
| Core Count | Up to 72C/144T |
| Note | Supports up to 2000W TDP CPUs (Liquid Cooled) |
| GPU (per Node) | |
| Max GPU Count | 1 onboard GPU |
| Supported GPU | NVIDIA H100 Tensor Core GPU on GH200 Grace Hopper™ Superchip (liquid-cooled) |
| CPU-GPU Interconnect | NVLink®-C2C |
| GPU-GPU Interconnect | PCIe |
| System Memory (per Node) | |
| Memory | Slot count: onboard memory; Max memory: up to 480GB ECC LPDDR5X; Additional GPU memory: up to 96GB ECC HBM3 |
| On-Board Devices (per Node) | |
| Chipset | System on Chip |
| Network Connectivity | 1x 1GbE BaseT with NVIDIA ConnectX®-7 or BlueField®-3 DPU |
| Input / Output (per Node) | |
| LAN | 1x RJ45 1GbE (dedicated IPMI port) |
| System BIOS | |
| BIOS Type | AMI 32MB SPI Flash EEPROM |
| PC Health Monitoring | |
| CPU | 8+4 phase-switching voltage regulator; monitors for CPU cores, chipset voltages, memory |
| FAN | Fans with tachometer monitoring; pulse-width modulated (PWM) fan connectors; status monitor for speed control |
| Temperature | Monitoring for CPU and chassis environment; thermal control for fan connectors |
| Chassis | |
| Form Factor | 1U Rackmount |
| Model | CSE-GP102TS-R000NDFP |
| Dimensions and Weight | |
| Height | 1.75" (44mm) |
| Width | 17.33" (440mm) |
| Depth | 37" (940mm) |
| Package | 9.5" (H) x 48" (W) x 28" (D) |
| Weight | Net weight: 48.5 lbs (22 kg); Gross weight: 65.5 lbs (29.7 kg) |
| Available Color | Silver |
| Expansion Slots (per Node) | |
| PCI-Express (PCIe) | 2x PCIe 5.0 x16 FHFL slots |
| Drive Bays / Storage (per Node) | |
| Hot-swap | 4x E1.S hot-swap NVMe drive slots |
| M.2 | 2x M.2 NVMe |

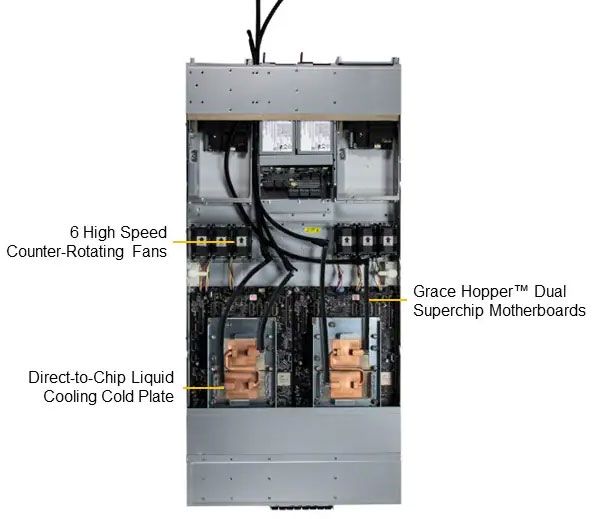

| System Cooling | |
|---|---|
| Fans | 7x removable heavy-duty 4cm fans |
| Power Supply | 2x 2700W redundant Titanium Level power supplies |
| Operating Environment | |
| Environmental Spec. | Operating temperature: 10°C ~ 35°C (50°F ~ 95°F); Non-operating temperature: -40°C to 60°C (-40°F to 140°F); Operating relative humidity: 8% to 90% (non-condensing); Non-operating relative humidity: 5% to 95% (non-condensing) |

Enterprise AI Inferencing & Training Server: Liquid Cooling, Efficiency, and Power Delivery

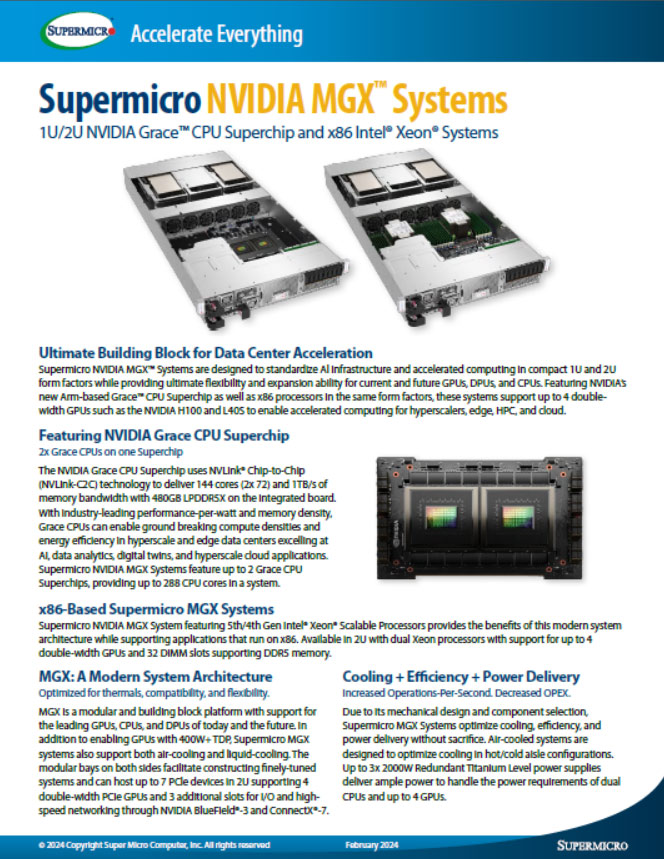

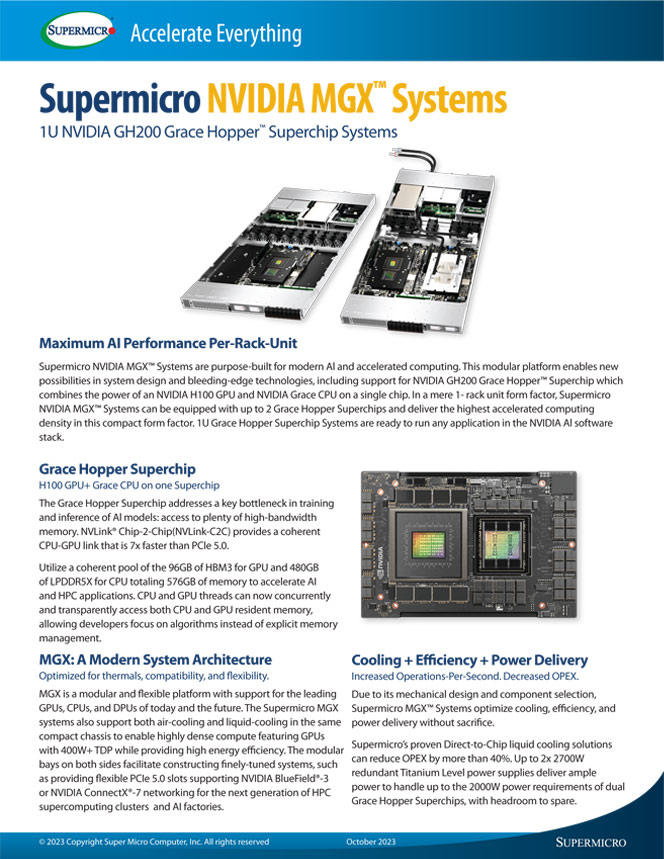

1U Grace Hopper MGX Systems

CPU+GPU Coherent Memory System for AI and HPC Applications

Thanks to their mechanical design and component selection, Supermicro MGX™ Systems optimize cooling, efficiency, and power delivery without compromise. Supermicro’s proven Direct-to-Chip liquid cooling solutions can reduce OPEX by more than 40%.

Two 2700W redundant Titanium Level power supplies deliver ample power for the combined requirement of up to 2000W of the dual Grace Hopper Superchips, with headroom to spare.
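A rough power-budget sketch for the paragraph above. It assumes the two supplies run as a 1+1 redundant pair, so a single 2700W unit must be able to carry the full load if the other fails, and it takes 2000W as the combined Superchip draw stated above. The result is the capacity left for fans, NICs/DPUs, and drives when both Superchips are at their maximum; it is arithmetic, not a measured value:

```latex
% Assumption: 1+1 redundancy, so usable capacity equals one PSU
\begin{aligned}
P_{\text{usable}}   &= 2700\,\text{W} \\
P_{\text{GH200}}    &\le 2000\,\text{W} \quad (\text{two Superchips combined}) \\
P_{\text{headroom}} &\ge 2700\,\text{W} - 2000\,\text{W} = 700\,\text{W}
\end{aligned}
```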

Supermicro servers with the NVIDIA GH200 Grace Hopper Superchip

Construct new solutions for accelerated infrastructures that enable scientists and engineers to focus on solving the world’s most important problems with larger datasets, more complex models, and new generative AI workloads. Within a single 1U chassis, Supermicro’s dual NVIDIA GH200 Grace Hopper Superchip systems deliver the highest level of performance for any application on the CUDA platform, with substantial speedups for AI workloads that have high memory requirements. In addition to hosting up to 2 onboard H100 GPUs in a 1U form factor, their modular bays enable full-size PCIe expansion for present and future accelerated computing components, high-speed scale-out, and clustering.

| SKU | ARS-221GL-NHIR | ARS-111GL-NHR | ARS-111GL-NHR-LCC | ARS-111GL-DNHR-LCC |
|---|---|---|---|---|
| Form Factor | 2U Rackmount | 1U Rackmount | 1U Rackmount | 1U Rackmount |
| CPU | 72-core NVIDIA Grace CPU on GH200 Grace Hopper™ Superchip | 72-core NVIDIA Grace CPU on GH200 Grace Hopper™ Superchip | 72-core NVIDIA Grace CPU on GH200 Grace Hopper™ Superchip | 72-core NVIDIA Grace CPU on GH200 Grace Hopper™ Superchip |
| GPU | | | | |
| System Cooling | Air | Air | Air and liquid | Air and liquid |