AS-4125GS-TNHR2-LCC

DP AMD 4U Liquid-Cooled GPU Server, NVIDIA HGX H100/H200 8-GPU

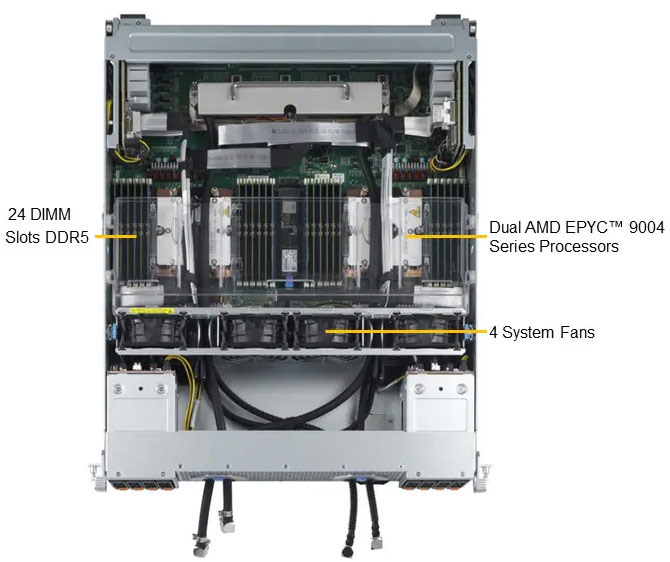

- Dual-Socket, AMD EPYC™ 9004 Series Processors

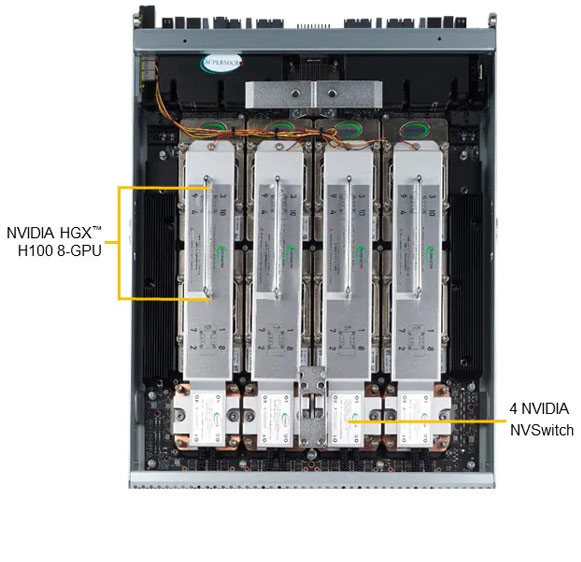

- High-density 4U system with NVIDIA® HGX™ H100/H200 8-GPU

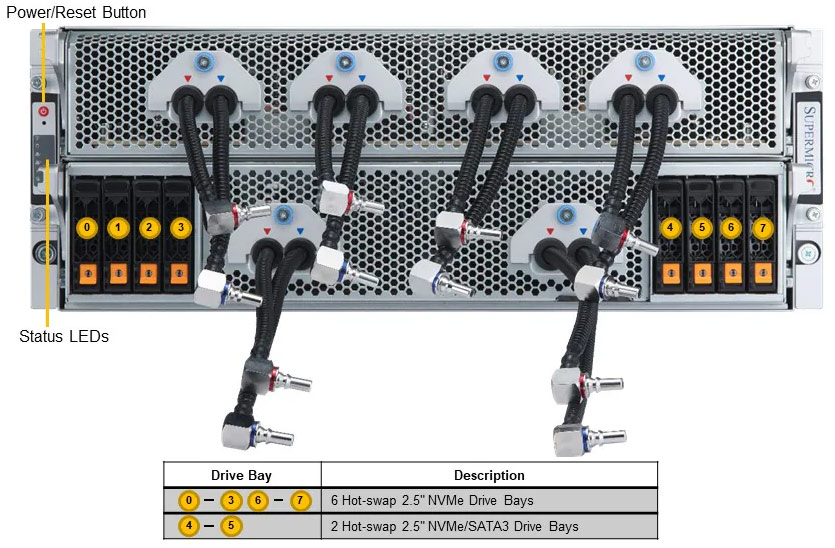

- 8 NVMe drives for NVIDIA GPUDirect Storage

- 8 NICs for NVIDIA GPUDirect RDMA (1:1 GPU-to-NIC ratio)

- Highest-bandwidth GPU-to-GPU communication using NVIDIA® NVLink®

- 24 DIMM slots: up to 6TB 4800MT/s ECC DDR5

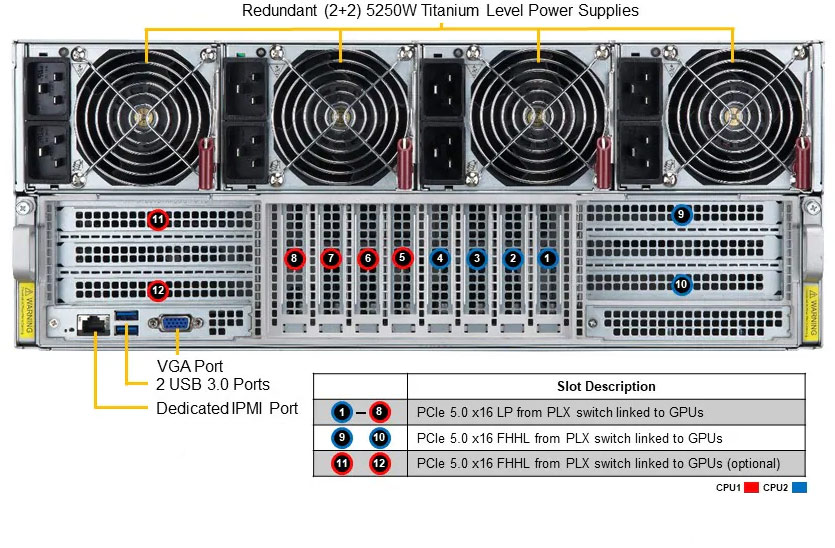

- 8 PCIe 5.0 x16 LP slots

- 2 PCIe 5.0 x16 FHHL slots, plus 2 optional PCIe 5.0 x16 FHHL slots

- 4x 5250W (2+2) redundant power supplies, Titanium Level

Key Applications

- HPC

- Deep Learning/AI/Machine Learning Development

Datasheet

| Product SKUs | A+ Server AS-4125GS-TNHR2-LCC |

| Motherboard | Super H13DSG-OM |

| Processor | |

| CPU | Dual AMD EPYC™ 9004/9005 Series processors (*AMD EPYC™ 9005 Series drop-in support requires board revision 2.x) |

| Core Count | Up to 128C/256T |

| Note | Supports up to 400W TDP CPUs (Liquid Cooled) |

| GPU | |

| Max GPU Count | Up to 8 onboard GPU(s) |

| Supported GPU | NVIDIA SXM: HGX H100 8-GPU (80GB), HGX H200 8-GPU (141GB) |

| CPU-GPU Interconnect | PCIe 5.0 x16 CPU-to-GPU Interconnect |

| GPU-GPU Interconnect | NVIDIA® NVLink® with NVSwitch™ |

| System Memory | |

| Memory | Slot Count: 24 DIMM slots; Max Memory (1DPC): up to 6TB 4800MT/s ECC DDR5 RDIMM/LRDIMM |

| On-Board Devices | |

| Chipset | AMD SP5 |

| IPMI | Intelligent Platform Management Interface (IPMI) 2.0 with virtual media over LAN and KVM-over-LAN support |

| Input / Output | |

| LAN | 1 RJ45 1 GbE Dedicated IPMI LAN port(s) |

| USB | 2 USB 3.0 Type-A port(s) (Rear) |

| Video | 1 VGA port(s) |

| System BIOS | |

| BIOS Type | AMI 32MB SPI Flash EEPROM |

| Management | |

| Software | Redfish API, Supermicro Server Manager (SSM), Supermicro Update Manager (SUM), SuperDoctor® 5, Super Diagnostics Offline, KVM with dedicated LAN, IPMI 2.0 |

| Power configurations | Power-on mode for AC power recovery; ACPI Power Management |

| Security | |

| Hardware | Trusted Platform Module (TPM) 2.0; Silicon Root of Trust (RoT), NIST 800-193 compliant |

| Features | Cryptographically Signed Firmware, Secure Boot, Secure Firmware Updates, Automatic Firmware Recovery, Supply Chain Security: Remote Attestation, Runtime BMC Protections, System Lockdown |

| PC Health Monitoring | |

| CPU | Monitors for CPU cores, chipset voltages, and memory; 7+1 phase-switching voltage regulator |

| FAN | Fans with tachometer monitoring; status monitor for speed control |

| Temperature | Monitoring for CPU and chassis environment; thermal control for fan connectors |

| Chassis | |

| Form Factor | 4U Rackmount |

| Model | CSE-GP401TS-R000NP |

| Dimensions and Weight | |

| Height | 6.85" (174 mm) |

| Width | 17.7" (449 mm) |

| Depth | 33.2" (842 mm) |

| Package | 13" (H) x 48" (W) x 26.4" (D) |

| Weight | Gross Weight: 138.89 lbs (63 kg) Net Weight: 80.03 lbs (53 kg) |

| Available Color | Silver |

| Front Panel | |

| Buttons | UID button |

| Expansion Slots | |

| PCI-Express (PCIe) Configuration | Default: 8 PCIe 5.0 x16 (in x16) LP slot(s), 2 PCIe 5.0 x16 (in x16) FHHL slot(s); Option A*: 8 PCIe 5.0 x16 (in x16) LP slot(s), 4 PCIe 5.0 x16 (in x16) FHHL slot(s) (*Requires additional parts.) |

| Drive Bays / Storage | |

| Drive Bays Configuration | Default: 8 bay(s) total; 8 front hot-swap 2.5" NVMe drive bay(s) |

| M.2 | 1 M.2 NVMe slot(s) (M-key) |

| System Cooling | |

| Fans | 4x 8cm heavy duty fans with optimal fan speed control |

| Liquid Cooling | Direct to Chip (D2C) Cold Plate (optional) |

| Power Supply | 4x 5250W redundant (2+2) Titanium Level power supplies (certification pending) |

| Dimension (WxHxL) | 106.5 x 82.1 x 245.3 mm |

| +12V | Max: 125A / Min: 0A (200Vac-240Vac) |

| AC Input | 5250W: 200-240Vac / 50-60Hz |

| Output Type | Backplanes (gold finger) |

| Operating Environment | |

| Environmental Spec. | Operating Temperature: 10°C ~ 35°C (50°F ~ 95°F); Non-operating Temperature: -40°C to 60°C (-40°F to 140°F); Operating Relative Humidity: 8% to 90% (non-condensing); Non-operating Relative Humidity: 5% to 95% (non-condensing) |
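The memory and power rows above can be cross-checked with simple arithmetic. The following is a minimal Python sketch, assuming that 1TB = 1024GB for DIMM sizing and that "2+2 redundant" means two supplies carry the load while the other two are held in reserve. The constant and function names are illustrative only and are not part of any Supermicro tool.

```python
# Back-of-the-envelope checks on the datasheet's memory and power figures.
# Assumptions (not stated in the datasheet): 1TB = 1024GB for DIMM sizing,
# and "2+2" means two active supplies plus two redundant spares.

DIMM_SLOTS = 24        # Slot Count, one DIMM per channel (1DPC)
MAX_MEMORY_TB = 6      # Max Memory (1DPC)
PSU_COUNT = 4          # 4x power supplies
PSU_WATTS = 5250       # rating per supply at 200-240Vac
REDUNDANT_PSUS = 2     # the "+2" in 2+2


def dimm_size_gb(max_tb: int, slots: int) -> int:
    """Per-DIMM capacity needed to reach the maximum with every slot filled."""
    return max_tb * 1024 // slots


def usable_power_w(count: int, watts: int, spares: int) -> int:
    """Power available while the spare supplies are held in reserve."""
    return (count - spares) * watts


if __name__ == "__main__":
    print(f"Per-DIMM capacity: {dimm_size_gb(MAX_MEMORY_TB, DIMM_SLOTS)} GB")
    print(f"Usable PSU capacity: {usable_power_w(PSU_COUNT, PSU_WATTS, REDUNDANT_PSUS)} W")
```

Under these assumptions, reaching 6TB requires 256GB DIMMs in all 24 slots, and the 2+2 configuration leaves 10,500W available with two supplies in reserve.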

Supermicro NVIDIA HGX H100/H200 8-GPU Servers

Large-scale AI applications demand greater computing power, faster memory bandwidth, and higher memory capacity to handle today's AI models, which can reach trillions of parameters. Supermicro NVIDIA HGX 8-GPU Systems are carefully optimized for cooling and power delivery to sustain maximum performance of the 8 interconnected H100/H200 GPUs.

Supermicro NVIDIA HGX Systems are designed to be the scalable building block for AI clusters: each system features 8x 400G NVIDIA BlueField®-3 or ConnectX-7 NICs for a 1:1 GPU-to-NIC ratio with support for NVIDIA Spectrum-X Ethernet or NVIDIA Quantum-2 InfiniBand. These systems can be deployed in a full turn-key Generative AI SuperCluster, from 32 nodes to thousands of nodes, accelerating time-to-delivery of mission-critical AI infrastructure.

Generative AI SuperCluster

This full turn-key data center solution accelerates time-to-delivery for mission-critical enterprise use cases and eliminates the complexity of building a large cluster, which was previously achievable only through the intensive design tuning and time-consuming optimization typical of supercomputing.

Highest Density

With 32 NVIDIA HGX H100/H200 8-GPU, 4U Liquid-cooled Systems (256 GPUs) in 5 Racks

Key Features

- Doubled compute density through Supermicro’s custom liquid-cooling solution, with up to 40% reduction in data center electricity cost

- 256 NVIDIA H100/H200 GPUs in one scalable unit

- 20TB of HBM3 with H100 or 36TB of HBM3e with H200 in one scalable unit

- 1:1 networking to each GPU to enable NVIDIA GPUDirect RDMA and Storage for training large language models with up to trillions of parameters

- Customizable AI data pipeline storage fabric with industry leading parallel file system options

- NVIDIA AI Enterprise Software Ready
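The scalable-unit totals follow from the per-node figures. The following is a small Python sketch, assuming 80GB of HBM3 per H100, 141GB of HBM3e per H200, decimal terabytes (1TB = 1000GB), and one 400Gb/s NIC per GPU as described above. The aggregate NIC bandwidth is a derived figure for illustration, not a number quoted in this document.

```python
# Aggregate resources of one scalable unit (32 nodes x 8 GPUs).
# Assumptions: 80GB HBM3 per H100, 141GB HBM3e per H200, decimal TB
# (1TB = 1000GB), and one 400Gb/s NIC per GPU (1:1 ratio).

NODES = 32
GPUS_PER_NODE = 8
HBM_GB = {"H100": 80, "H200": 141}
NIC_GBPS = 400  # gigabits per second per NIC


def unit_totals(gpu: str) -> dict:
    """Return GPU count, total HBM (TB), and total NIC bandwidth (Tb/s)."""
    gpus = NODES * GPUS_PER_NODE
    return {
        "gpus": gpus,
        "hbm_tb": gpus * HBM_GB[gpu] / 1000,
        "nic_tbps": gpus * NIC_GBPS / 1000,  # one NIC per GPU
    }


if __name__ == "__main__":
    for name in HBM_GB:
        t = unit_totals(name)
        print(f"{name}: {t['gpus']} GPUs, {t['hbm_tb']:.1f} TB HBM, "
              f"{t['nic_tbps']:.1f} Tb/s NIC bandwidth")
```

This yields about 20.5TB of HBM3 with H100 and 36.1TB of HBM3e with H200, consistent with the rounded 20TB and 36TB figures in the list above.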

Compute Node


4U Liquid-Cooled NVIDIA HGX H100/H200 8-GPU System

Highest density and efficiency with D2C liquid cooling for both GPUs and CPUs to optimize performance and energy cost

- GPU: NVIDIA HGX H100/H200 8-GPU with up to 141GB HBM3e memory per GPU

- GPU-GPU Interconnect: 900GB/s NVLink interconnect with 4x NVSwitch, about 7x the bandwidth of PCIe 5.0

- CPU: Dual AMD EPYC™ 9004 series processors

- Memory: Up to 32 DIMM slots: 8TB DDR5-5600

- Storage: Up to 16x 2.5" Hot-swap NVMe drive bays

- NIC: High-performance 400 Gb/s NVIDIA BlueField®-3 or NVIDIA ConnectX®-7

- Networking: Scale out with NVIDIA Quantum-2 InfiniBand or NVIDIA Spectrum-X™ Ethernet

- Direct-to-chip liquid cooling to support CPUs up to 350W and GPUs up to 700W
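The 7x comparison between NVLink and PCIe in the list above can be roughly reproduced. The following is a sketch in Python, assuming that the 900GB/s NVLink figure is total (bidirectional) bandwidth per GPU and that the comparison is against a PCIe 5.0 x16 link counted in both directions. The comparison basis is an assumption, not something this document specifies.

```python
# Rough check of the NVLink vs. PCIe 5.0 x16 bandwidth comparison.
# Assumptions: 900GB/s is bidirectional NVLink bandwidth per GPU, compared
# against a PCIe 5.0 x16 link counted in both directions.

PCIE5_GT_PER_LANE = 32   # gigatransfers/s per lane (1 bit per transfer)
LANES = 16               # x16 link
ENCODING = 128 / 130     # 128b/130b line-encoding efficiency
NVLINK_GBPS = 900        # GB/s, from the spec above


def pcie_bidirectional_gbps(gt: float, lanes: int, encoding: float) -> float:
    """Usable PCIe bandwidth in GB/s, summed over both directions."""
    per_direction = gt * lanes * encoding / 8  # bits -> bytes
    return 2 * per_direction


if __name__ == "__main__":
    pcie = pcie_bidirectional_gbps(PCIE5_GT_PER_LANE, LANES, ENCODING)
    print(f"PCIe 5.0 x16: {pcie:.0f} GB/s; NVLink ratio: {NVLINK_GBPS / pcie:.1f}x")
```

Under these assumptions, PCIe 5.0 x16 provides roughly 126GB/s, so 900GB/s of NVLink bandwidth is a little over 7x.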