SYS-821GE-TNHR

DP Intel 8U GPU Server with NVIDIA HGX H100/H200 8-GPU and Rear I/O

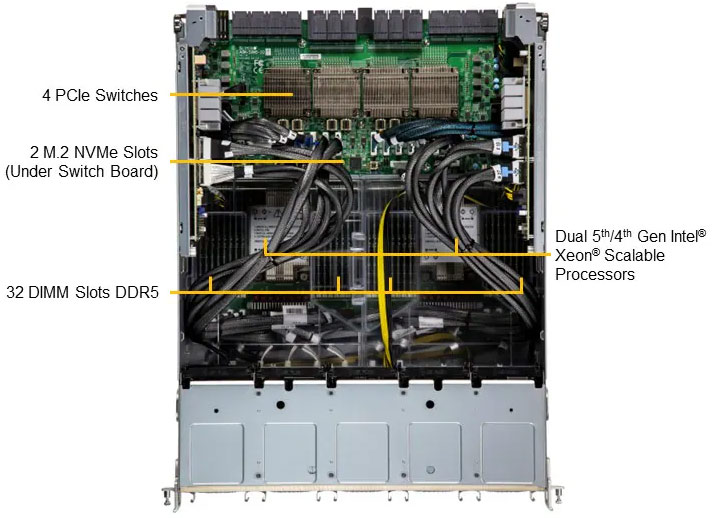

- 5th/4th Gen Intel® Xeon® Scalable processor support

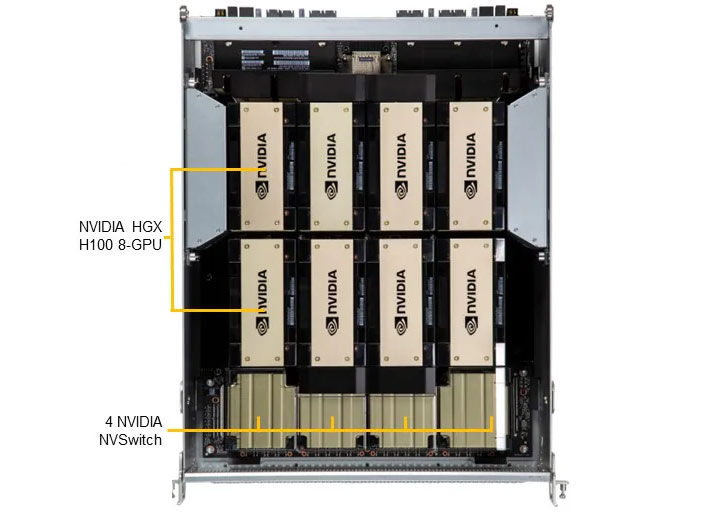

- Support for NVIDIA HGX™ H100/H200 8-GPU

- 32 DIMM slots, up to 8TB (32x 256GB DRAM); memory type: 5600MT/s ECC DDR5

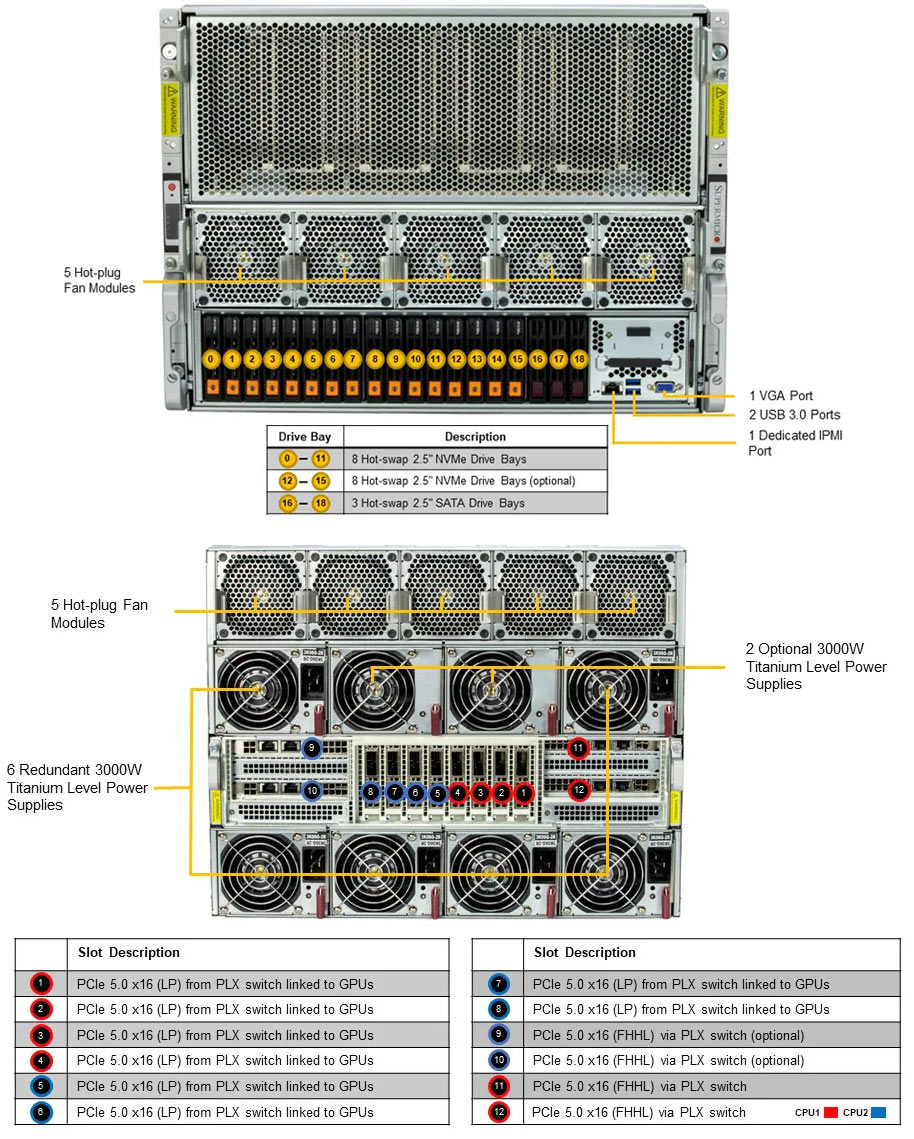

- 8 PCIe Gen 5.0 x16 LP slots

- 2 PCIe Gen 5.0 x16 FHHL slots, 2 PCIe Gen 5.0 x16 FHHL slots (optional)

- Flexible networking options

- 2 M.2 NVMe for boot drive only

- 16x 2.5" Hot-swap NVMe drive bays (12x by default, 4x optional)

- 3x 2.5" Hot-swap SATA drive bays

- Optional: 8x 2.5" Hot-swap SATA drive bays

- 10 heavy duty fans with optimal fan speed control

- 6x 3000W (4+2) Redundant Power Supplies, Titanium Level

- Optional: 8x 3000W (4+4) Redundant Power Supplies, Titanium Level

Key Applications

- High Performance Computing

- AI/Deep Learning Training

- Industrial Automation, Retail

- Conversational AI

- Business Intelligence & Analytics

- Drug Discovery

- Climate and Weather Modelling

- Finance & Economics

Supermicro Ready-to-Ship Gold Series Pre-Configured Server

Best-Selling Server Platforms, Pre-Configured with Key Components for Reduced Lead Times

Model: SYS-821GE-TNHR-G1

- CPU: 2x Intel® Xeon® Platinum 8570 (56Core/2.1GHz)

- GPU: 1x NVIDIA HGX™ H200 8-GPU

- Memory: 2TB DDR5-5600

- M.2: 2x 960GB M.2 NVMe SSD

- Networking: 8x Single 400G NDR/ETH OSFP, 1x Dual 200G NDR200/ETH QSFP112

- Power Supplies: 6x 3000W Titanium Level

| Product SKUs | SuperServer SYS-821GE-TNHR; SuperServer SYS-821GE-TNHR-G1 (Gold Series version with pre-configured components) |

| Motherboard | Super X13DEG-OAD |

| Processor | |

| CPU | Dual Socket E (LGA-4677) 5th Gen Intel® Xeon® / 4th Gen Intel® Xeon® Scalable processors |

| Core Count | Up to 64C/128T; Up to 320MB Cache per CPU |

| Note | Supports up to 350W TDP CPUs (Air Cooled) |

| GPU | |

| Max GPU Count | 8 onboard GPUs |

| Supported GPU | NVIDIA SXM: HGX H100 8-GPU (80GB), HGX H200 8-GPU (141GB) |

| CPU-GPU Interconnect | PCIe 5.0 x16 CPU-to-GPU Interconnect |

| GPU-GPU Interconnect | NVIDIA® NVLink® with NVSwitch™ |

| System Memory | |

| Memory | Slot Count: 32 DIMM slots; Max Memory (1DPC): Up to 4TB 5600MT/s ECC DDR5 RDIMM; Max Memory (2DPC): Up to 8TB 4400MT/s ECC DDR5 RDIMM |

| Memory Voltage | 1.1V |

| On-Board Devices | |

| Chipset | Intel® C741 |

| Network Connectivity | 2 RJ45 10GbE with Intel® X550-AT2 (optional); 2 SFP28 25GbE with Broadcom® BCM57414 (optional); 2 RJ45 10GbE with Intel® X710-AT2 (optional) |

| Input / Output | |

| Video | 1 VGA port |

| System BIOS | |

| BIOS Type | AMI 32MB SPI Flash EEPROM |

| Management | |

| Software | SuperCloud Composer®; Supermicro Server Manager (SSM); Supermicro Update Manager (SUM); Supermicro SuperDoctor® 5 (SD5); Super Diagnostics Offline (SDO); Supermicro Thin-Agent Service (TAS); SuperServer Automation Assistant (SAA) |

| Power configurations | Power-on mode for AC power recovery; ACPI Power Management |

| Security | |

| Hardware | Trusted Platform Module (TPM) 2.0; Silicon Root of Trust (RoT), NIST 800-193 Compliant |

| Features | Cryptographically Signed Firmware; Secure Boot; Secure Firmware Updates; Automatic Firmware Recovery; Supply Chain Security: Remote Attestation; Runtime BMC Protections; System Lockdown |

| PC Health Monitoring | |

| CPU | Monitors for CPU Cores, Chipset Voltages, Memory; 8+4 Phase-switching voltage regulator |

| FAN | Fans with tachometer monitoring; Status monitor for speed control; Pulse Width Modulated (PWM) fan connectors |

| Temperature | Monitoring for CPU and chassis environment; Thermal Control for fan connectors |

| Chassis | |

| Form Factor | 8U Rackmount |

| Model | CSE-GP801TS |

| Dimensions and Weight | |

| Height | 14" (355.6 mm) |

| Width | 17.2" (437 mm) |

| Depth | 33.2" (843.28 mm) |

| Package | 29.5" (H) x 27.5" (W) x 51.2" (D) |

| Weight | Gross Weight: 225 lbs (102.1 kg); Net Weight: 166 lbs (75.3 kg) |

| Available Color | Black front & silver body |

| Front Panel | |

| LED | Hard drive activity LED; Network activity LEDs; Power status LED; System Overheat & Power Fail LED |

| Buttons | Power On/Off button; System Reset button |

| Expansion Slots | |

| PCI-Express (PCIe) Configuration | Default: 8 PCIe 5.0 x16 LP slots, 2 PCIe 5.0 x16 FHHL slots; Option A: 2 PCIe 5.0 x16 FHHL slots |

| Drive Bays / Storage | |

| Drive Bays Configuration | Default: Total 15 bays (12 front hot-swap 2.5" NVMe drive bays, 3 front hot-swap 2.5" SATA drive bays); Option A: Total 19 bays (12 front hot-swap 2.5" NVMe drive bays, 4 front hot-swap 2.5" NVMe* drive bays, 3 front hot-swap 2.5" SATA drive bays). *NVMe support may require additional storage controller and/or cables |

| M.2 | 2 M.2 NVMe slots (M-key) |

| System Cooling | |

| Fans | 10 heavy duty fans with optimal fan speed control |

| Power Supply | |

| 6x 3000W | 6x 3000W Redundant (3 + 3) Titanium Level (96%) power supplies |

| Operating Environment | |

| Environmental Spec. | Operating Temperature: 10°C to 35°C (50°F to 95°F); Non-operating Temperature: -40°C to 60°C (-40°F to 140°F); Operating Relative Humidity: 8% to 90% (non-condensing); Non-operating Relative Humidity: 5% to 95% (non-condensing) |
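The two memory limits in the Memory row of the specification table follow from how many DIMMs are populated per channel. The sketch below reproduces that arithmetic. The channel count per socket is not stated in the datasheet; it is inferred here from the fact that 2DPC fills all 32 slots across two sockets, and the function and constant names are illustrative only.

```python
# Values taken from the specification table.
DIMM_SLOTS = 32
SOCKETS = 2
MAX_DIMM_GB = 256  # largest DIMM listed (32x 256GB)

# Assumption: inferred from 2DPC populating all 32 slots on 2 sockets.
CHANNELS_PER_SOCKET = DIMM_SLOTS // (SOCKETS * 2)

# Rated memory speed for each population mode, as listed in the Memory row.
SPEED_MTS = {1: 5600, 2: 4400}


def max_memory_tb(dimms_per_channel: int) -> float:
    """Return the maximum capacity in TB (1 TB = 1024 GB) for 1DPC or 2DPC."""
    if dimms_per_channel not in SPEED_MTS:
        raise ValueError("only 1DPC and 2DPC are listed in the datasheet")
    populated = SOCKETS * CHANNELS_PER_SOCKET * dimms_per_channel
    return populated * MAX_DIMM_GB / 1024


if __name__ == "__main__":
    for dpc in (1, 2):
        print(f"{dpc}DPC: {max_memory_tb(dpc):.0f} TB at {SPEED_MTS[dpc]} MT/s")
```

The trade-off this makes visible: populating the second DIMM per channel doubles capacity but lowers the rated speed from 5600MT/s to 4400MT/s.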

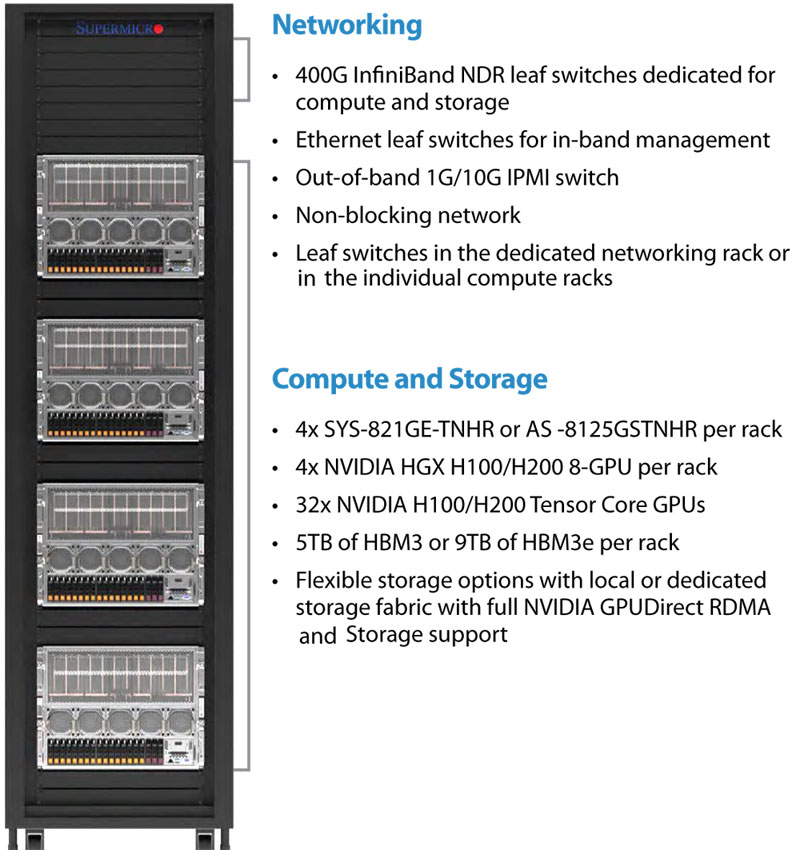

Supermicro NVIDIA HGX H100/H200 8-GPU Servers

Large-Scale AI applications demand greater computing power, faster memory bandwidth, and higher memory capacity to handle today's AI models, reaching up to trillions of parameters. Supermicro NVIDIA HGX 8-GPU Systems are carefully optimized for cooling and power delivery to sustain maximum performance of the 8 interconnected H100/H200 GPUs.

Supermicro NVIDIA HGX Systems are designed to be the scalable building block for AI clusters: each system features 8x 400G NVIDIA BlueField®-3 or ConnectX-7 NICs for a 1:1 GPU-to-NIC ratio with support for NVIDIA Spectrum-X Ethernet or NVIDIA Quantum-2 InfiniBand. These systems can be deployed in a full turn-key Generative AI SuperCluster, from 32 nodes to thousands of nodes, accelerating time-to-delivery of mission-critical AI infrastructure.
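As a rough illustration of the 1:1 GPU-to-NIC design described above, the following sketch computes the theoretical aggregate fabric bandwidth per node and the NIC count at the smallest cluster size mentioned. These are line-rate figures derived from the numbers in the text, not measured throughput, and the function names are hypothetical.

```python
# Values taken from the paragraph above.
GPUS_PER_NODE = 8
NICS_PER_NODE = 8
NIC_SPEED_GBPS = 400   # per NIC, in gigabits per second
MIN_CLUSTER_NODES = 32


def gpu_to_nic_ratio() -> float:
    """GPUs served by each NIC; 1.0 means every GPU has a dedicated NIC."""
    return GPUS_PER_NODE / NICS_PER_NODE


def node_fabric_gbps() -> int:
    """Theoretical aggregate line rate of all compute-fabric NICs in one node."""
    return NICS_PER_NODE * NIC_SPEED_GBPS


def cluster_nic_count(nodes: int = MIN_CLUSTER_NODES) -> int:
    """Total 400G NICs across a cluster with the given number of nodes."""
    return nodes * NICS_PER_NODE


if __name__ == "__main__":
    print(f"GPU:NIC ratio      {gpu_to_nic_ratio():.0f}:1")
    print(f"Per-node line rate {node_fabric_gbps() / 1000:.1f} Tb/s")
    print(f"NICs in 32 nodes   {cluster_nic_count()}")
```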

The full turn-key data center solution accelerates time-to-delivery for mission-critical enterprise use cases, and eliminates the complexity of building a large cluster, which previously was achievable only through the intensive design tuning and time-consuming optimization of supercomputing.

Proven Design Datasheet

With 32 NVIDIA HGX H100/H200 8-GPU, 8U Air-cooled Systems (256 GPUs) in 9 Racks

Key Features

- Proven industry leading architecture for large scale AI infrastructure deployments

- 256 NVIDIA H100/H200 GPUs in one scalable unit

- 20TB of HBM3 with H100 or 36TB of HBM3e with H200 in one scalable unit

- 1:1 networking to each GPU to enable NVIDIA GPUDirect RDMA and Storage for training large language models with up to trillions of parameters

- Customizable AI data pipeline storage fabric with industry leading parallel file system options

- NVIDIA AI Enterprise Software Ready
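The GPU count and HBM totals quoted in the features above can be checked with a short calculation. This is a minimal sketch that assumes decimal units (1 TB = 1000 GB) and rounding to the nearest terabyte, which is what reproduces the 20TB and 36TB figures; the names are illustrative.

```python
# Values taken from this datasheet.
NODES_PER_SCALABLE_UNIT = 32
GPUS_PER_NODE = 8
HBM_GB_PER_GPU = {"H100": 80, "H200": 141}


def scalable_unit_gpus(nodes: int = NODES_PER_SCALABLE_UNIT) -> int:
    """Total GPUs in a scalable unit with the given number of nodes."""
    return nodes * GPUS_PER_NODE


def scalable_unit_hbm_tb(gpu: str, nodes: int = NODES_PER_SCALABLE_UNIT) -> float:
    """Total HBM in TB, assuming decimal units (1 TB = 1000 GB)."""
    return scalable_unit_gpus(nodes) * HBM_GB_PER_GPU[gpu] / 1000


if __name__ == "__main__":
    print(f"GPUs per scalable unit: {scalable_unit_gpus()}")
    for gpu in HBM_GB_PER_GPU:
        print(f"{gpu}: {scalable_unit_hbm_tb(gpu):.2f} TB of HBM")
```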

Compute Node


Accelerate Large Scale AI Training Workloads

Large-scale AI training demands cutting-edge technologies that maximize the parallel computing power of GPUs, so that models with billions if not trillions of parameters can be trained on massive, exponentially growing datasets.

Leveraging NVIDIA’s HGX™ H100 SXM 8-GPU, NVLink™ and NVSwitch™ GPU-GPU interconnects with up to 900GB/s of bandwidth, and 1:1 networking to each GPU for node clustering, these systems are optimized to train large language models from scratch in the shortest amount of time.

Completing the stack with all-flash NVMe for a faster AI data pipeline, we provide fully integrated racks with liquid cooling options to ensure fast deployment and a smooth AI training experience.

32-Node Scalable Unit Rack Scale Design Close-up

SYS-821GE-TNHR / AS-8125GS-TNHR

| Overview | 8U Air-cooled System with NVIDIA HGX H100/H200 |

|---|---|

| CPU | Dual 5th/4th Gen Intel® Xeon® or AMD EPYC 9004 Series Processors |

| Memory | 2TB DDR5 (recommended) |

| GPU | NVIDIA HGX H100/H200 8-GPU (80GB HBM3 or 141GB HBM3e per GPU); 900GB/s NVLink GPU-GPU interconnect with NVSwitch |

| Networking | 8x NVIDIA ConnectX®-7 Single-port 400Gbps/NDR OSFP NICs; 2x NVIDIA ConnectX®-7 Dual-port 200Gbps/NDR200 QSFP112 NICs |

| Storage | 30.4TB NVMe (4x 7.6TB U.3); 3.8TB NVMe (2x 1.9TB U.3, Boot) [Optional M.2 available] |

| Power Supply | 6x 3000W Redundant Titanium Level power supplies |