Supermicro NVIDIA Blackwell Architecture Solutions: Transformative AI Data Center Building Blocks

Your NVIDIA Blackwell Journey Starts Here

In this transformative moment of AI, where evolving scaling laws continue to push the limits of data center capabilities, our latest NVIDIA Blackwell-powered solutions, developed through close collaboration with NVIDIA, offer unprecedented computational performance, density, and efficiency with next-generation air-cooled and liquid-cooled architectures.

With our readily deployable AI Data Center Building Block solutions, Supermicro is your premier partner to start your NVIDIA Blackwell journey, providing sustainable, cutting-edge solutions that accelerate AI innovations.

End-to-End AI Data Center Building Block Solutions Advantage

A broad range of air-cooled and liquid-cooled systems with multiple CPU options, a full data center management software suite, turn-key rack-level integration with full networking, cabling, and cluster-level L12 validation, plus global delivery, support, and service.

The Most Powerful and Efficient NVIDIA Blackwell Architecture Solutions

Vast Experience

Supermicro’s AI Data Center Building Block Solutions power the largest liquid-cooled AI data center deployment in the world.

Flexible Offerings

Air or liquid-cooled, GPU-optimized, multiple system and rack form factors, CPUs, storage, networking options. Optimizable for your needs.

Fast Time to Online

Accelerated delivery with global capacity, world-class deployment expertise, and on-site services to bring your AI to production, fast.

Liquid-Cooling Pioneer

Proven, scalable, and plug-and-play liquid-cooling solutions to sustain the AI revolution. Designed specifically for NVIDIA Blackwell Architecture.

Next-Gen Liquid-Cooled Servers

Up to 96 NVIDIA HGX™ B200 GPUs in a Single Rack

with Maximum Scalability and Efficiency

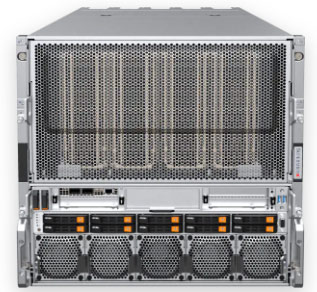

The new liquid-cooled 4U NVIDIA HGX B200 8-GPU system features newly developed cold plates and an advanced tubing design, paired with the new 250 kW coolant distribution unit (CDU), which more than doubles the cooling capacity of the previous generation in the same 4U form factor. The new architecture further improves on the efficiency and serviceability of its predecessor, which was designed for NVIDIA HGX H100/H200 8-GPU.

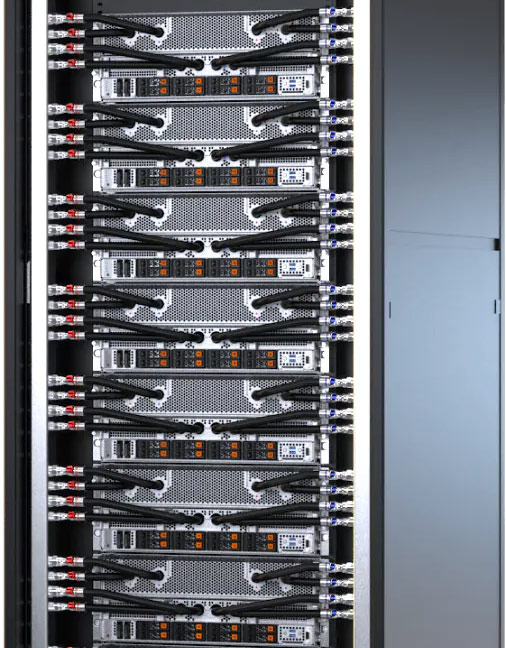

Available in 42U, 48U, or 52U configurations, the rack-scale design with the new vertical coolant distribution manifolds (CDM) means that horizontal manifolds no longer occupy valuable rack units. This enables 8 systems with 64 NVIDIA Blackwell GPUs in a 42U rack, and up to 12 systems with 96 NVIDIA Blackwell GPUs in a 52U rack.
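The rack densities quoted above follow from simple arithmetic, sketched below as a rough illustration. The 8-GPU, 4U system size and the system counts per rack come from the text. The function names are hypothetical, and the leftover rack units are only what remains after the GPU systems; how that space is used is not stated here.

```python
# Figures from the text: each 4U HGX B200 system holds 8 GPUs;
# a 42U rack fits 8 systems and a 52U rack fits 12 systems.
# Function names are hypothetical, chosen for illustration only.
GPUS_PER_SYSTEM = 8
SYSTEM_HEIGHT_U = 4


def gpus_per_rack(systems: int) -> int:
    """Total GPUs for a given number of 8-GPU systems."""
    return systems * GPUS_PER_SYSTEM


def units_left(rack_height_u: int, systems: int) -> int:
    """Rack units not occupied by the GPU systems themselves."""
    return rack_height_u - systems * SYSTEM_HEIGHT_U


for rack_height_u, systems in ((42, 8), (52, 12)):
    print(
        f"{rack_height_u}U rack: {systems} systems, "
        f"{gpus_per_rack(systems)} GPUs, "
        f"{units_left(rack_height_u, systems)}U not used by GPU systems"
    )
```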

4U Liquid-cooled Server for NVIDIA HGX B200 8-GPU

Maximum Density, Performance and Efficiency with Next-Gen Liquid-cooling for frontier model training and inference

Air-Cooled Server, Evolved

The Best Selling Air-cooled System

Optimized for NVIDIA Blackwell Architecture

The new air-cooled 10U NVIDIA HGX B200 system features a redesigned chassis with expanded thermal headroom to accommodate eight 1000W TDP Blackwell GPUs. Up to four of the new 10U air-cooled systems can be installed and fully integrated in a rack, the same density as the previous generation, while providing up to 15x the inference and 3x the training performance.

All Supermicro NVIDIA HGX B200 systems are equipped with a 1:1 GPU-to-NIC ratio supporting NVIDIA BlueField®-3 or NVIDIA ConnectX®-7 for scaling across a high-performance compute fabric.

10U Air-cooled System for NVIDIA HGX B200 8-GPU

Industry-leading Air-cooling Design for large language model training and high volume inference

An Exascale of Compute in a Rack

End-to-end Liquid-cooling Solution for NVIDIA GB200 NVL72

Optimized for NVIDIA Blackwell Architecture

Supermicro’s GB200 NVL72 solution represents a breakthrough in AI computing infrastructure, built on Supermicro’s end-to-end liquid-cooling technology. It enables up to a 25x performance improvement at the same power envelope compared to the previous NVIDIA Hopper generation, while reducing data center electricity costs by up to 40%.

The system integrates 72 NVIDIA Blackwell GPUs and 36 NVIDIA Grace CPUs in a single rack, delivering exascale computing capabilities through NVIDIA’s most extensive NVLink™ network to date, achieving 130 TB/s of GPU communications.
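As a back-of-the-envelope check on the rack-level figures above, the sketch below derives the GPU-to-CPU ratio and an average NVLink share per GPU. The GPU count, CPU count, and 130 TB/s total are from the text. Splitting the total evenly across GPUs is an assumption made for illustration, and the function names are hypothetical.

```python
# Figures from the text: 72 Blackwell GPUs, 36 Grace CPUs,
# 130 TB/s of aggregate GPU communications over NVLink.
GPUS = 72
CPUS = 36
NVLINK_TOTAL_TB_S = 130


def gpus_per_cpu(gpus: int, cpus: int) -> int:
    """GPUs paired with each CPU in the rack."""
    return gpus // cpus


def avg_bandwidth_per_gpu(total_tb_s: float, gpus: int) -> float:
    """Average share of the aggregate bandwidth per GPU.

    Assumes an even split across all GPUs (an illustrative assumption).
    """
    return total_tb_s / gpus


print(f"GPUs per Grace CPU: {gpus_per_cpu(GPUS, CPUS)}")
print(f"Average NVLink share per GPU: "
      f"{avg_bandwidth_per_gpu(NVLINK_TOTAL_TB_S, GPUS):.2f} TB/s")
```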

The versatile 48U solution supports both liquid-to-air and liquid-to-liquid cooling configurations, accommodating a variety of data center environments.

NVIDIA GB200 NVL72 SuperCluster for NVIDIA GB200 Grace™ Blackwell Superchip

Liquid-to-Air Solution for NVIDIA GB200 NVL72

Featured Resources

NVIDIA Blackwell Architecture Solutions

Transformative AI Data Center Building Blocks

Liquid-Cooled AI SuperCluster

with 256 NVIDIA HGX™ B200 GPUs, 32 4U Liquid-cooled Systems

Air-Cooled AI SuperCluster

with 256 NVIDIA HGX™ B200 GPUs, 32 10U Air-cooled Systems

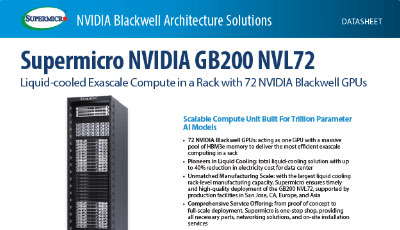

Supermicro NVIDIA GB200 NVL72

Liquid-cooled Exascale Compute in a Rack with 72 NVIDIA Blackwell GPUs
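The SuperCluster sizes listed above can be related to the per-rack densities quoted earlier on this page. The following is a minimal sketch that counts compute racks only. It assumes the stated densities (8 or 12 liquid-cooled systems per rack, 4 air-cooled systems per rack) and ignores any networking or cooling racks, which are not described here. Function names are hypothetical.

```python
import math

# Figures from the text: 256 GPUs per SuperCluster, 8 GPUs per HGX B200 system.
CLUSTER_GPUS = 256
GPUS_PER_SYSTEM = 8


def systems_needed(gpus: int) -> int:
    """Number of 8-GPU systems required for a given GPU count."""
    return math.ceil(gpus / GPUS_PER_SYSTEM)


def compute_racks(systems: int, systems_per_rack: int) -> int:
    """Compute racks required, counting GPU systems only (an assumption)."""
    return math.ceil(systems / systems_per_rack)


systems = systems_needed(CLUSTER_GPUS)
print(f"Systems for {CLUSTER_GPUS} GPUs: {systems}")
print(f"Liquid-cooled, 8 systems per rack: {compute_racks(systems, 8)} racks")
print(f"Liquid-cooled, 12 systems per rack: {compute_racks(systems, 12)} racks")
print(f"Air-cooled, 4 systems per rack: {compute_racks(systems, 4)} racks")
```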