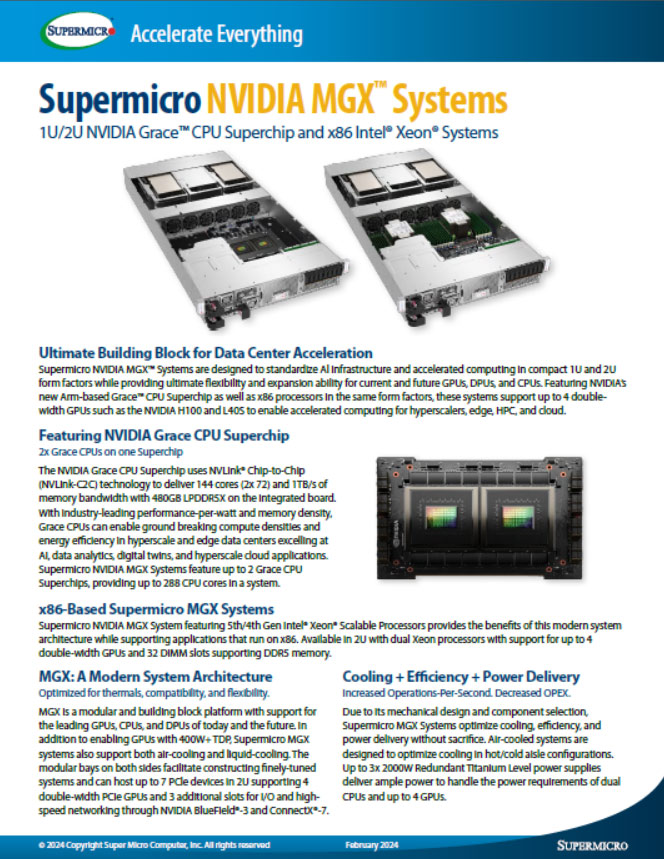

Supermicro NVIDIA MGX™ Systems for Generative AI and Beyond

Accelerate Time to Market for Generative AI and Beyond

Construct tailored AI solutions with Supermicro NVIDIA MGX™ Systems, featuring the latest NVIDIA GH200 Grace Hopper™ Superchip and NVIDIA Grace™ CPU Superchip. The new modular architecture is designed to standardize AI infrastructure and accelerated computing in compact 1U and 2U form factors while providing ultimate flexibility and expandability for current and future GPUs, DPUs, and CPUs.

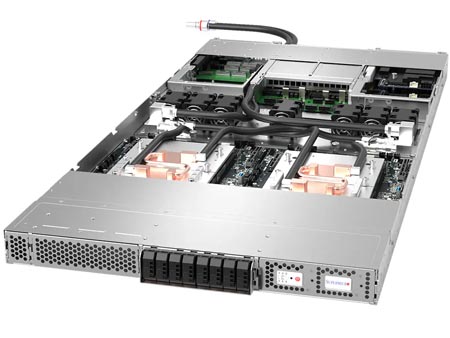

Supermicro’s advanced liquid-cooling technology enables power-efficient, hyper-dense configurations, such as a 1U 2-node system with 2 NVIDIA GH200 Grace Hopper Superchips, each comprising a single NVIDIA H100 Tensor Core GPU and an NVIDIA Grace CPU joined by a high-speed interconnect. Supermicro delivers thousands of rack-scale AI clusters per month from facilities around the world, ensuring plug-and-play compatibility.

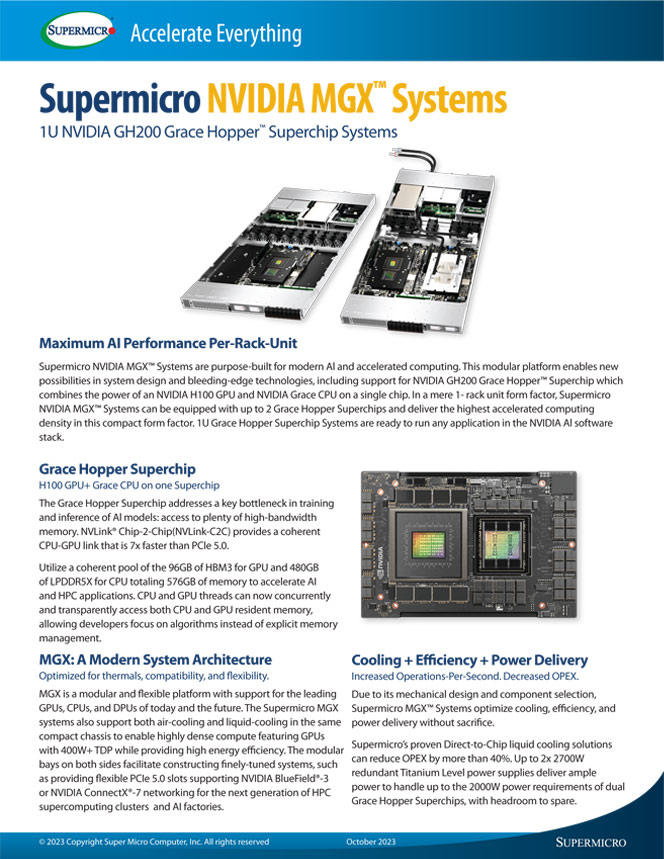

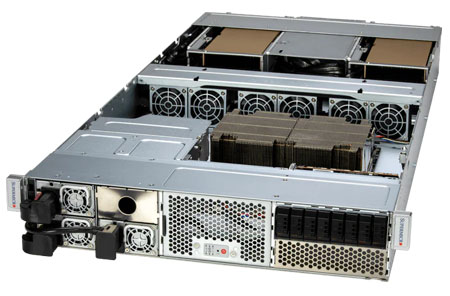

1U/2U NVIDIA Grace™ CPU Superchip and x86 Intel® Xeon® Systems

Featuring NVIDIA Grace CPU Superchip

x86-Based Supermicro MGX Systems

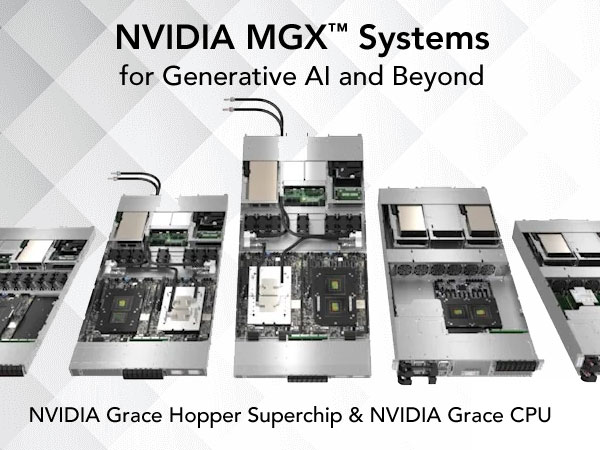

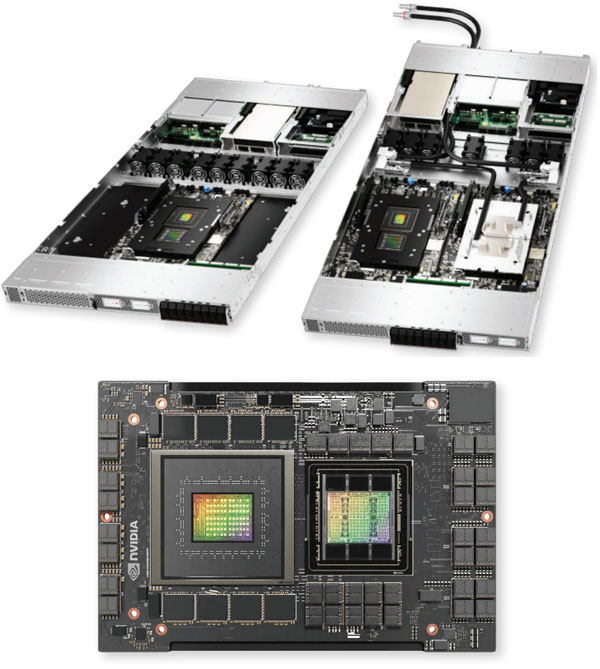

1U NVIDIA GH200 Grace Hopper™ Superchip Systems

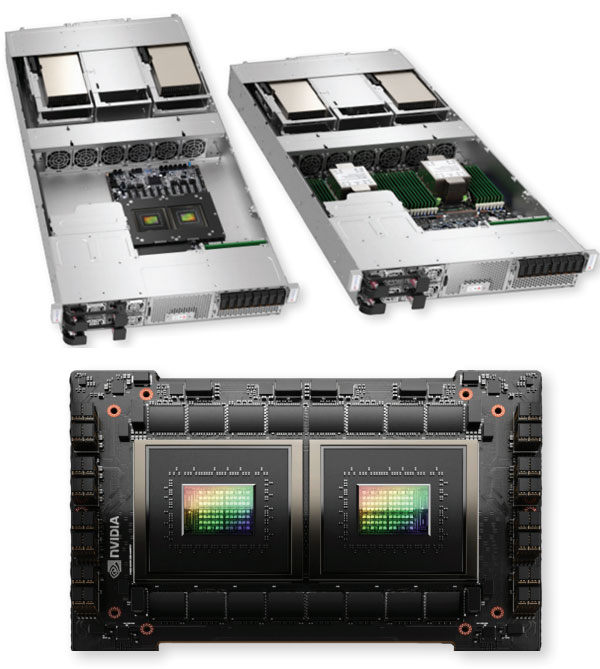

Grace Hopper Superchip: H100 GPU + Grace CPU on one Superchip

Ultimate Building Block for Data Center Acceleration

Supermicro NVIDIA MGX™ Systems are designed to standardize AI infrastructure and accelerated computing in compact 1U and 2U form factors while providing ultimate flexibility and expandability for current and future GPUs, DPUs, and CPUs.

Featuring NVIDIA’s new Arm-based Grace™ CPU Superchip as well as x86 processors in the same form factors, these systems support up to 4 double-width GPUs, such as the NVIDIA H100 and L40S, to enable accelerated computing for hyperscale, edge, HPC, and cloud deployments.

Featuring NVIDIA Grace CPU Superchip: 2x Grace CPUs on one Superchip

The NVIDIA Grace CPU Superchip uses NVLink® Chip-to-Chip (NVLink-C2C) technology to connect two Grace CPUs, delivering 144 cores (2x 72), 480GB of integrated LPDDR5X memory, and up to 1TB/s of memory bandwidth.

With industry-leading performance per watt and memory density, Grace CPUs can enable groundbreaking compute density and energy efficiency in hyperscale and edge data centers, excelling at AI, data analytics, digital twins, and hyperscale cloud applications. Supermicro NVIDIA MGX Systems feature up to 2 Grace CPU Superchips, providing up to 288 CPU cores per system.
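The core counts above follow from simple multiplication. The sketch below only restates the figures in this section (72 cores per Grace CPU, 2 Grace CPUs per Superchip); the constant and function names are hypothetical, chosen for illustration.

```python
# Core-count arithmetic for Grace CPU Superchip systems, using figures from the text.
# Names are hypothetical; only the numbers come from this document.
CORES_PER_GRACE_CPU = 72
GRACE_CPUS_PER_SUPERCHIP = 2

def cores_per_system(superchips: int) -> int:
    """Total CPU cores for a system with the given number of Grace CPU Superchips."""
    return superchips * GRACE_CPUS_PER_SUPERCHIP * CORES_PER_GRACE_CPU

print(cores_per_system(1))  # 144
print(cores_per_system(2))  # 288
```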

| SKU | ARS-121L-DNR | ARS-221GL-NR | SYS-221GE-NR |
|---|---|---|---|
| Form Factor | 1U 2-node system with one NVIDIA Grace CPU Superchip per node | 2U GPU system with a single NVIDIA Grace CPU Superchip | 2U GPU system with dual x86 CPUs |
| CPU | 144-core Grace Arm Neoverse V2 CPU in a single chip per node (288 cores total per system) | 144-core Grace Arm Neoverse V2 CPU in a single chip | 4th Gen Intel Xeon Scalable processors (up to 56 cores per socket) |
| GPU | Contact Supermicro sales for supported configurations | Up to 4 double-width GPUs, including NVIDIA H100 PCIe, H100 NVL, and L40S | Up to 4 double-width GPUs, including NVIDIA H100 PCIe, H100 NVL, and L40S |
| Memory | Up to 480GB of integrated LPDDR5X memory with ECC and up to 1TB/s of bandwidth per node | Up to 480GB of integrated LPDDR5X memory with ECC and up to 1TB/s of bandwidth | Up to 2TB across 32 DIMM slots, ECC DDR5-4800 |
| Drives | Up to 4x hot-swap E1.S drives and 2x M.2 NVMe drives per node | Up to 8x hot-swap E1.S drives and 2x M.2 NVMe drives | Up to 8x hot-swap E1.S drives and 2x M.2 NVMe drives |
| Networking | 2x PCIe 5.0 x16 slots per node supporting NVIDIA BlueField-3 or ConnectX-7 (e.g., 1 GPU and 1 BlueField-3) | 3x PCIe 5.0 x16 slots supporting NVIDIA BlueField-3 or ConnectX-7 (in addition to 4x PCIe 5.0 x16 slots for GPUs) | 3x PCIe 5.0 x16 slots supporting NVIDIA BlueField-3 or ConnectX-7 (in addition to 4x PCIe 5.0 x16 slots for GPUs) |
| Interconnect | NVLink™-C2C at 900GB/s for CPU-CPU interconnect (within node) | NVLink™ Bridge GPU-GPU interconnect supported (e.g., H100 NVL) | NVLink™ Bridge GPU-GPU interconnect supported (e.g., H100 NVL) |
| Cooling | Air-cooling | Air-cooling | Air-cooling |
| Power | 2x 2700W redundant Titanium Level power supplies | 3x 2000W redundant Titanium Level power supplies | 3x 2000W redundant Titanium Level power supplies |
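The memory rows can be turned into per-system totals. This is a minimal sketch: the 480GB-per-Superchip and 2TB/32-slot figures come from the table, while the helper names are hypothetical and two assumptions are not stated there: that the 2TB x86 maximum is reached with all 32 slots populated by identical DIMMs, and that 1TB counts as 1024GB.

```python
# Per-system memory totals derived from the Grace CPU Superchip / x86 table.
# Assumptions (not in the table): 2TB is reached with 32 identical DIMMs,
# and 1TB = 1024GB. Helper names are hypothetical.
LPDDR5X_PER_SUPERCHIP_GB = 480

def grace_system_memory_gb(nodes: int) -> int:
    """Integrated LPDDR5X across all nodes, one Grace CPU Superchip per node."""
    return nodes * LPDDR5X_PER_SUPERCHIP_GB

def dimm_size_gb(total_tb: int, slots: int) -> int:
    """DIMM capacity needed to reach total_tb with every slot populated."""
    return total_tb * 1024 // slots

print(grace_system_memory_gb(2))  # ARS-121L-DNR: 960
print(grace_system_memory_gb(1))  # ARS-221GL-NR: 480
print(dimm_size_gb(2, 32))        # SYS-221GE-NR: 64
```

Under these assumptions, the 2-node 1U system carries twice the integrated memory of the single-Superchip 2U system, and the x86 system's 2TB maximum implies 64GB DIMMs.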

Maximum AI Performance per Rack Unit

Supermicro NVIDIA MGX™ Systems are purpose-built for modern AI and accelerated computing. This modular platform opens new possibilities in system design and supports bleeding-edge technologies, including the NVIDIA GH200 Grace Hopper™ Superchip, which combines an NVIDIA H100 GPU and an NVIDIA Grace CPU on a single Superchip.

In just one rack unit (1U), Supermicro NVIDIA MGX™ Systems can be equipped with up to 2 Grace Hopper Superchips, delivering the highest accelerated computing density in this compact form factor. 1U Grace Hopper Superchip systems are ready to run any application in the NVIDIA AI software stack.

Grace Hopper Superchip: H100 GPU + Grace CPU on one Superchip

The Grace Hopper Superchip addresses a key bottleneck in training and inference of AI models: access to high-bandwidth memory. NVLink® Chip-to-Chip (NVLink-C2C) provides a coherent CPU-GPU link that is 7x faster than PCIe 5.0.
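A rough way to sanity-check the 7x figure is to compare NVLink-C2C's quoted 900GB/s against a PCIe 5.0 link. This sketch assumes the comparison is against a PCIe 5.0 x16 link with both directions counted and 128b/130b encoding overhead ignored; the document does not state the basis of the comparison, so treat it as an approximation.

```python
# Rough check of the "7x faster than PCIe 5.0" claim.
# Assumptions: comparison against a PCIe 5.0 x16 link, both directions counted,
# 128b/130b line-encoding overhead ignored.
PCIE5_GT_PER_LANE = 32   # gigatransfers/s per lane, 1 bit per transfer
LANES = 16
NVLINK_C2C_GB_S = 900    # CPU-GPU bandwidth quoted for NVLink-C2C

pcie5_x16_per_direction = PCIE5_GT_PER_LANE * LANES / 8  # 64.0 GB/s
pcie5_x16_bidirectional = 2 * pcie5_x16_per_direction    # 128.0 GB/s
ratio = NVLINK_C2C_GB_S / pcie5_x16_bidirectional

print(f"PCIe 5.0 x16: {pcie5_x16_bidirectional:.0f} GB/s, NVLink-C2C ratio: {ratio:.1f}x")
```

If encoding overhead were included, the PCIe figure would drop slightly and the ratio would rise a little above 7.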

Use a coherent memory pool of 96GB of HBM3 for the GPU and 480GB of LPDDR5X for the CPU, totaling 576GB, to accelerate AI and HPC applications. CPU and GPU threads can concurrently and transparently access both CPU- and GPU-resident memory, allowing developers to focus on algorithms instead of explicit memory management.
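The pool size is the sum of GPU HBM and CPU LPDDR5X on one Superchip. The sketch below uses the capacities quoted in this document (96GB HBM3 or 144GB HBM3e, plus 480GB LPDDR5X); the names are hypothetical.

```python
# Size of the coherent CPU+GPU memory pool on one Grace Hopper Superchip,
# using capacities quoted in this document. Names are hypothetical.
LPDDR5X_GB = 480                          # Grace CPU memory
HBM_OPTIONS_GB = {"HBM3": 96, "HBM3e": 144}

def coherent_pool_gb(hbm_gb: int, lpddr_gb: int = LPDDR5X_GB) -> int:
    """Total memory addressable by both CPU and GPU threads over NVLink-C2C."""
    return hbm_gb + lpddr_gb

for name, hbm in HBM_OPTIONS_GB.items():
    print(f"{name}: {coherent_pool_gb(hbm)} GB")
# HBM3: 576 GB
# HBM3e: 624 GB
```

The HBM3e result matches the "480GB + 144GB of fast-access memory" entries in the table that follows.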

| SKU | ARS-111GL-NHR | ARS-111GL-NHR-LCC | ARS-111GL-DNHR-LCC |
|---|---|---|---|
| Form Factor | 1U system with a single NVIDIA Grace Hopper Superchip (air-cooled) | 1U system with a single NVIDIA Grace Hopper Superchip (liquid-cooled) | 1U 2-node system with one NVIDIA Grace Hopper Superchip per node (liquid-cooled) |
| CPU | 72-core Grace Arm Neoverse V2 CPU + H100 Tensor Core GPU in a single chip | 72-core Grace Arm Neoverse V2 CPU + H100 Tensor Core GPU in a single chip | 2x 72-core Grace Arm Neoverse V2 CPU + H100 Tensor Core GPU, each in a single chip (1 per node) |
| GPU | NVIDIA H100 Tensor Core GPU with 96GB of HBM3 or 144GB of HBM3e (coming soon) | NVIDIA H100 Tensor Core GPU with 96GB of HBM3 or 144GB of HBM3e | NVIDIA H100 Tensor Core GPU with 96GB of HBM3 or 144GB of HBM3e per node |
| Memory | Up to 480GB of integrated LPDDR5X memory with ECC (up to 480GB + 144GB of fast-access memory) | Up to 480GB of integrated LPDDR5X memory with ECC (up to 480GB + 144GB of fast-access memory) | Up to 480GB of LPDDR5X per node (up to 480GB + 144GB of fast-access memory per node) |
| Drives | 8x hot-swap E1.S drives and 2x M.2 NVMe drives | 8x hot-swap E1.S drives and 2x M.2 NVMe drives | 8x hot-swap E1.S drives and 2x M.2 NVMe drives |
| Networking | 3x PCIe 5.0 x16 slots supporting NVIDIA BlueField-3 or ConnectX-7 | 3x PCIe 5.0 x16 slots supporting NVIDIA BlueField-3 or ConnectX-7 | 2x PCIe 5.0 x16 slots per node supporting NVIDIA BlueField-3 or ConnectX-7 |
| Interconnect | NVLink-C2C at 900GB/s for CPU-GPU interconnect | NVLink-C2C at 900GB/s for CPU-GPU interconnect | NVLink-C2C at 900GB/s for CPU-GPU interconnect |
| Cooling | Air-cooling | Liquid-cooling | Liquid-cooling |
| Power | 2x 2000W redundant Titanium Level power supplies | 2x 2000W redundant Titanium Level power supplies | 2x 2700W redundant Titanium Level power supplies |
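To compare density across the SKUs in this document, the maximum GPU count can be divided by rack units. This is a simple sketch that counts GPUs only: it assumes the maximum configurations listed in the tables and does not weigh the different GPU types (a GH200 Superchip versus a PCIe H100 or L40S). The `System` class and `gpus_per_u` helper are hypothetical.

```python
from dataclasses import dataclass

# GPU density per rack unit, using maximum GPU counts from the spec tables.
# Counts GPUs only; GPU types differ between SKUs. Names are hypothetical.
@dataclass(frozen=True)
class System:
    sku: str
    rack_units: int
    gpus: int  # maximum GPUs per system

SYSTEMS = [
    System("ARS-111GL-NHR", 1, 1),
    System("ARS-111GL-NHR-LCC", 1, 1),
    System("ARS-111GL-DNHR-LCC", 1, 2),
    System("ARS-221GL-NR", 2, 4),  # up to 4 double-width PCIe GPUs
]

def gpus_per_u(s: System) -> float:
    """Maximum GPUs divided by the system's height in rack units."""
    return s.gpus / s.rack_units

# Densest systems first; Python's sort keeps ties in list order.
for s in sorted(SYSTEMS, key=gpus_per_u, reverse=True):
    print(f"{s.sku}: {gpus_per_u(s):.1f} GPUs per U")
```

By this measure, the liquid-cooled 2-node 1U system matches the 2U four-GPU system at two GPUs per rack unit, while pairing each GPU with its own Grace CPU over NVLink-C2C.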