Supermicro AI Servers with NVIDIA GH200 Grace Hopper™, NVIDIA H200 & NVIDIA H100 Tensor Core GPU

Accelerating AI adoption with NVIDIA and Supermicro: Best-in-class technology for optimal performance

While the adoption of AI is accelerating, selecting the right AI infrastructure is easier said than done. With a variety of systems and architectures available, organizations must identify solutions that align with their unique demands and budget constraints.

To meet current and future demands, organizations must balance performance, scalability, and cost when selecting their AI infrastructure. Matching that infrastructure to key workloads is critical: understanding how those workloads map to critical applications, and selecting the right systems for the job, can improve performance, efficiency, and the long-term success of these solutions.

Supermicro servers are purpose-built to support AI adoption and expansion. Each system is powered by NVIDIA’s top-of-the-line accelerators: the NVIDIA GH200 Grace Hopper Superchip, the NVIDIA H200 Tensor Core GPU, or the NVIDIA H100 Tensor Core GPU.

Supermicro servers featuring NVIDIA’s GH200 Grace Hopper Superchip

For workloads that require high compute power, extensive memory capacity, and seamless data sharing, a unified CPU+GPU memory architecture can enable faster data access and improved efficiency. The NVIDIA GH200 also delivers outstanding performance for large language models (LLMs), retrieval-augmented generation (RAG), graph neural networks (GNNs), and HPC, making it ideal for scenarios that require real-time insights and large-scale data processing.

Integrated with Supermicro’s infrastructure, the NVIDIA GH200 Grace Hopper Superchip offers transformative performance for AI and HPC workloads. By combining an ARM-based* CPU with GPU-accelerated computing and high-bandwidth memory, it provides AI-driven applications and similar workloads the power and efficiency they need to perform at scale.

Supermicro servers with the NVIDIA GH200 Grace Hopper Superchip

- ARS-221GL-NHIR
  - CPU: 72-core NVIDIA Grace CPU on GH200 Grace Hopper™ Superchip
  - Max GPU Count: Up to 2 onboard GPUs
  - Supported GPU: NVIDIA Hopper Tensor Core GPU on GH200 Grace Hopper™ Superchip
  - Air-cooled
- ARS-111GL-NHR
  - CPU: 72-core NVIDIA Grace CPU on GH200 Grace Hopper™ Superchip
  - Max GPU Count: Up to 1 onboard GPU
  - Supported GPU: NVIDIA Hopper Tensor Core GPU on GH200 Grace Hopper™ Superchip
  - Air-cooled
- ARS-111GL-NHR-LCC
  - CPU: 72-core NVIDIA Grace CPU on GH200 Grace Hopper™ Superchip
  - Max GPU Count: Up to 1 onboard GPU
  - Supported GPU: NVIDIA Hopper Tensor Core GPU on GH200 Grace Hopper™ Superchip
  - Air and liquid cooled
- ARS-111GL-DNHR-LCC
  - CPU: 72-core NVIDIA Grace CPU on GH200 Grace Hopper™ Superchip
  - Max GPU Count: Up to 1 onboard GPU (per node)
  - Supported GPU: NVIDIA Hopper Tensor Core GPU on GH200 Grace Hopper™ Superchip
  - Air and liquid cooled

Supermicro servers featuring NVIDIA’s H200 and H100 Tensor Core GPUs

In environments where robust computing power is essential for managing vast datasets, executing complex algorithms, and enabling real-time data processing, hardware designed for parallel processing, high GPU density, and advanced tensor core acceleration plays a critical role. These features are vital for reducing training times and enhancing model accuracy in tasks such as deep learning model training and high-performance simulations.

The NVIDIA H100 Tensor Core GPU enables an order-of-magnitude leap for large-scale AI and HPC, with extraordinary performance, scalability, and security for every data center, and includes the NVIDIA AI Enterprise software suite to streamline AI development and deployment. With fourth-generation NVIDIA NVLink™, H100 accelerates exascale workloads with a dedicated Transformer Engine for trillion-parameter language models. For small jobs, H100 can be partitioned down to right-sized Multi-Instance GPU (MIG) partitions. With Hopper Confidential Computing, this scalable compute power can secure sensitive applications on shared data center infrastructure.
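To make the idea of right-sizing concrete, the following Python sketch picks the smallest MIG profile whose nominal memory covers a job's requirement. The profile table is an assumption based on NVIDIA's published MIG profiles for the 80GB H100 and is not part of this brief; `smallest_profile` is a hypothetical helper, and the selection looks at memory only, not compute. The profiles actually available on a given system can be listed with `nvidia-smi mig -lgip`.

```python
from typing import Optional, Tuple

# Assumed MIG profiles for an 80GB H100, ordered smallest to largest:
# (profile name, nominal memory in GB, max instances per GPU).
# Verify on the target system; usable memory is slightly below nominal.
H100_80GB_MIG_PROFILES = [
    ("1g.10gb", 10, 7),
    ("1g.20gb", 20, 4),
    ("2g.20gb", 20, 3),
    ("3g.40gb", 40, 2),
    ("4g.40gb", 40, 1),
    ("7g.80gb", 80, 1),
]


def smallest_profile(required_gb: float) -> Optional[Tuple[str, int]]:
    """Return (profile, max instances) for the first profile that fits.

    Returns None when the job needs more memory than a single GPU offers.
    """
    for name, memory_gb, max_instances in H100_80GB_MIG_PROFILES:
        if memory_gb >= required_gb:
            return name, max_instances
    return None


for job_gb in (8, 16, 30, 100):
    print(job_gb, "GB ->", smallest_profile(job_gb))
```

A job that fits in the smallest profile can share one GPU with up to six other instances, while a job that exceeds 80GB returns `None` and needs a full GPU with more memory or several GPUs.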

The NVIDIA H200 Tensor Core GPU builds upon the groundbreaking capabilities of the H100, offering everything the H100 delivers and more. With features such as Multi-Instance GPU (MIG), NVLink®, and the Transformer Engine, the H200 provides the same versatility for AI and HPC workloads while pushing boundaries further. The biggest leap forward lies in the HBM3e memory, which is faster and larger than its predecessor and accelerates generative AI, large language models (LLMs), and scientific computing for HPC workloads. NVIDIA HGX™ H200 delivers record-breaking performance: an eight-way HGX H200 provides over 32 petaflops of FP8 deep learning compute and 1.1 terabytes (TB) of aggregate high-bandwidth memory, setting a new benchmark for generative AI and HPC applications. Combined with Supermicro’s highly flexible building block architecture for AI infrastructure, these NVIDIA accelerated computing platforms deliver exceptional performance for AI and high-performance computing workloads.
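The aggregate figures quoted for an eight-way HGX H200 can be checked with simple arithmetic. The short Python sketch below uses only the per-GPU capacities given in the system list (80GB for H100, 141GB for H200) and the 32-petaflop FP8 figure above; the function names are illustrative, and the terabyte conversion assumes decimal units (1 TB = 1000 GB).

```python
# Per-GPU memory capacities as quoted in the system list, in GB.
GPU_MEMORY_GB = {"H100": 80, "H200": 141}


def aggregate_memory_gb(gpu: str, gpu_count: int = 8) -> int:
    """Total high-bandwidth memory across all GPUs on one baseboard."""
    return GPU_MEMORY_GB[gpu] * gpu_count


def per_gpu_petaflops(total_petaflops: float, gpu_count: int = 8) -> float:
    """Even split of a quoted system-level figure across its GPUs."""
    return total_petaflops / gpu_count


h200_total = aggregate_memory_gb("H200")  # 8 * 141 = 1128 GB
h100_total = aggregate_memory_gb("H100")  # 8 * 80 = 640 GB

print(f"HGX H200 8-GPU: {h200_total} GB (~{h200_total / 1000:.1f} TB)")
print(f"HGX H100 8-GPU: {h100_total} GB")
print(f"FP8 per GPU: about {per_gpu_petaflops(32):.0f} petaflops")
```

Eight 141GB GPUs give 1128GB, which rounds to the 1.1 TB stated above, and the 32-petaflop system figure corresponds to roughly 4 petaflops of FP8 compute per GPU.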

Supermicro servers with NVIDIA H200 and H100 Tensor Core GPUs

- SYS-821GE-TNHR
  - CPU: 5th/4th Gen Intel® Xeon® Dual processor
  - Max GPU Count: Up to 8 onboard GPUs
  - Supported GPU: NVIDIA HGX H100 8-GPU (80GB), HGX H200 8-GPU (141GB)
  - Air-cooled
- AS-8125GS-TNHR
  - CPU: AMD EPYC™ 9004/9005 Dual processor
  - Max GPU Count: Up to 8 onboard GPUs
  - Supported GPU: NVIDIA SXM: HGX H100 8-GPU (80GB), HGX H200 8-GPU (141GB)
  - Air-cooled
- SYS-421GE-TNHR2-LCC
  - CPU: 5th/4th Gen Intel® Xeon® Dual processor
  - Max GPU Count: Up to 8 onboard GPUs
  - Supported GPU: NVIDIA SXM: HGX H100 8-GPU (80GB), HGX H200 8-GPU (141GB)
  - Liquid-cooled
- AS-4125GS-TNHR2-LCC
  - CPU: AMD EPYC™ 9004/9005 Dual processor
  - Max GPU Count: Up to 8 onboard GPUs
  - Supported GPU: NVIDIA SXM: HGX H100 8-GPU (80GB), HGX H200 8-GPU (141GB)
  - Liquid-cooled

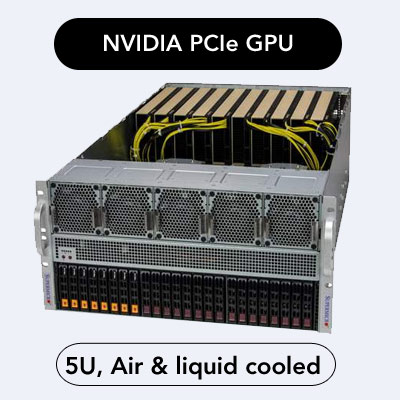

- SYS-521GE-TNRT
  - CPU: 5th/4th Gen Intel® Xeon® Dual processor
  - Max GPU Count: Up to 10 double-width or 10 single-width GPUs
  - Supported GPU: NVIDIA PCIe: H100 NVL, H100, L40S, A100
  - Air and liquid cooled

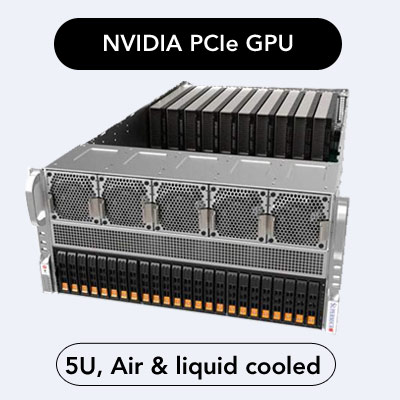

- SYS-522GA-NRT
  - CPU: Dual Socket Intel® Xeon® 6900 series processors with P-cores
  - Max GPU Count: Up to 10 double-width or 10 single-width GPUs
  - Supported GPU: NVIDIA PCIe: H100 NVL, L40S, L4
  - Air and liquid cooled

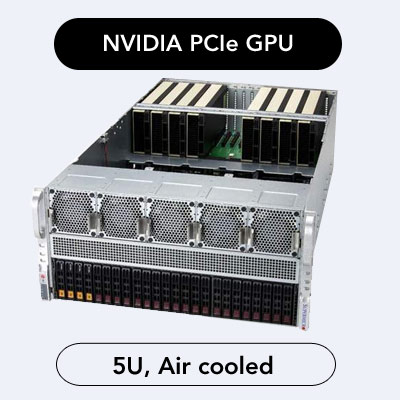

- AS-5126GS-TNRT
  - CPU: AMD EPYC™ 9004/9005 Dual processor
  - Max GPU Count: Up to 8 double-width GPUs
  - Supported GPU: NVIDIA PCIe: H100 NVL, H200 NVL (141GB), L40S
  - Air-cooled
- ARS-221GL-NR
  - CPU: Dual 72-core CPUs on an NVIDIA Grace CPU Superchip
  - Max GPU Count: Up to 2 double-width GPUs
  - Supported GPU: NVIDIA PCIe: H100 NVL, L40S
  - Air-cooled

With advanced GPU interconnectivity, high GPU compute density per system and rack, high scalability, and optimized NVIDIA AI Enterprise software libraries, frameworks, and toolsets, these systems are designed to accelerate deep learning model training, large-scale simulations, and data analysis, all in scalable, energy-efficient configurations that can be tailored to specific workloads. Supermicro offers system options for both NVIDIA HGX and NVIDIA PCIe (Peripheral Component Interconnect Express) GPUs, allowing organizations to easily adopt and scale based on existing infrastructure.
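As a rough illustration of matching a system to existing infrastructure, the Python sketch below encodes a few of the systems listed above and filters them by GPU form factor, cooling, and GPU count. The `System` class, the `find_systems` helper, and the `"GH200"`/`"SXM"`/`"PCIe"` labels are hypothetical names chosen for this example; the catalog is a subset of the list, with values copied from it.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class System:
    model: str
    gpu_form: str        # "GH200", "SXM" (HGX baseboard), or "PCIe"
    max_gpus: int
    cooling: frozenset   # subset of {"air", "liquid"}


# Subset of the systems listed in this brief.
CATALOG = [
    System("ARS-111GL-NHR", "GH200", 1, frozenset({"air"})),
    System("ARS-111GL-NHR-LCC", "GH200", 1, frozenset({"air", "liquid"})),
    System("SYS-821GE-TNHR", "SXM", 8, frozenset({"air"})),
    System("AS-4125GS-TNHR2-LCC", "SXM", 8, frozenset({"liquid"})),
    System("SYS-521GE-TNRT", "PCIe", 10, frozenset({"air", "liquid"})),
    System("AS-5126GS-TNRT", "PCIe", 8, frozenset({"air"})),
]


def find_systems(
    catalog: List[System],
    gpu_form: Optional[str] = None,
    cooling: Optional[str] = None,
    min_gpus: int = 1,
) -> List[str]:
    """Return model names that satisfy every filter that was supplied."""
    matches = []
    for system in catalog:
        if gpu_form is not None and system.gpu_form != gpu_form:
            continue
        if cooling is not None and cooling not in system.cooling:
            continue
        if system.max_gpus < min_gpus:
            continue
        matches.append(system.model)
    return matches


print(find_systems(CATALOG, gpu_form="SXM", cooling="liquid"))
print(find_systems(CATALOG, gpu_form="PCIe", min_gpus=10))
```

A data center with liquid cooling already in place could start from the first query, while a site limited to PCIe cards and air cooling would filter on `gpu_form="PCIe"` and `cooling="air"` instead.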